Building Networks for AI Workloads

By: Jacob

The impact of AI runs two ways in networking: in addition to “Networking for AI,” there is “AI for Networking.” Either way, our networks need to evolve to tame it.

Optimizing the network for AI workloads

Sharada Yeluri (Senior Director of Engineering at Juniper Networks) wrote a long article describing the connectivity … In recent months we were involved in troubleshooting a large AI processor network fabric. AI workloads in particular are driving an unprecedented need for low-latency, high-bandwidth connectivity between servers, storage, and the GPUs essential for AI training and inference. Artificial intelligence workloads … Number one is instrumentation.

Network AI

The goal is for …

6 Types of AI Workloads, Challenges & Critical Best Practices

Watch: Meta’s engineers on building network infrastructure for AI

Celestica is excited to see the next-generation building blocks of the merchant silicon-based architecture and the adoption of advanced … This document is intended to provide a best-practice blueprint for building a modern network environment that will allow AI/ML workloads to run at their best using shipped hardware and software features. You’ve heard about the latest advancements in our technology, the new AI solutions powered by Microsoft, our partners, and our customers, and the excitement is just beginning.

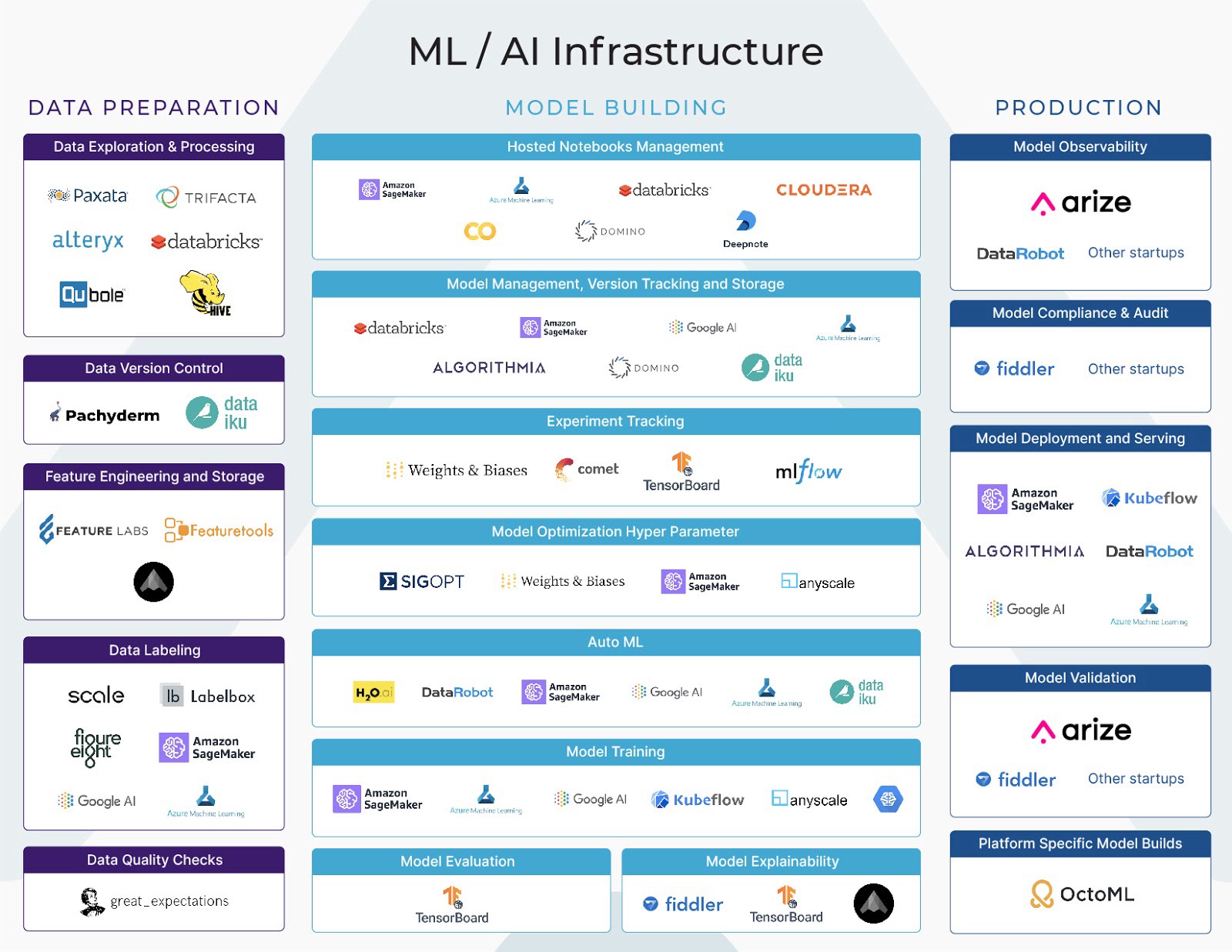

The Evolution of Data Center Networking for AI Workloads

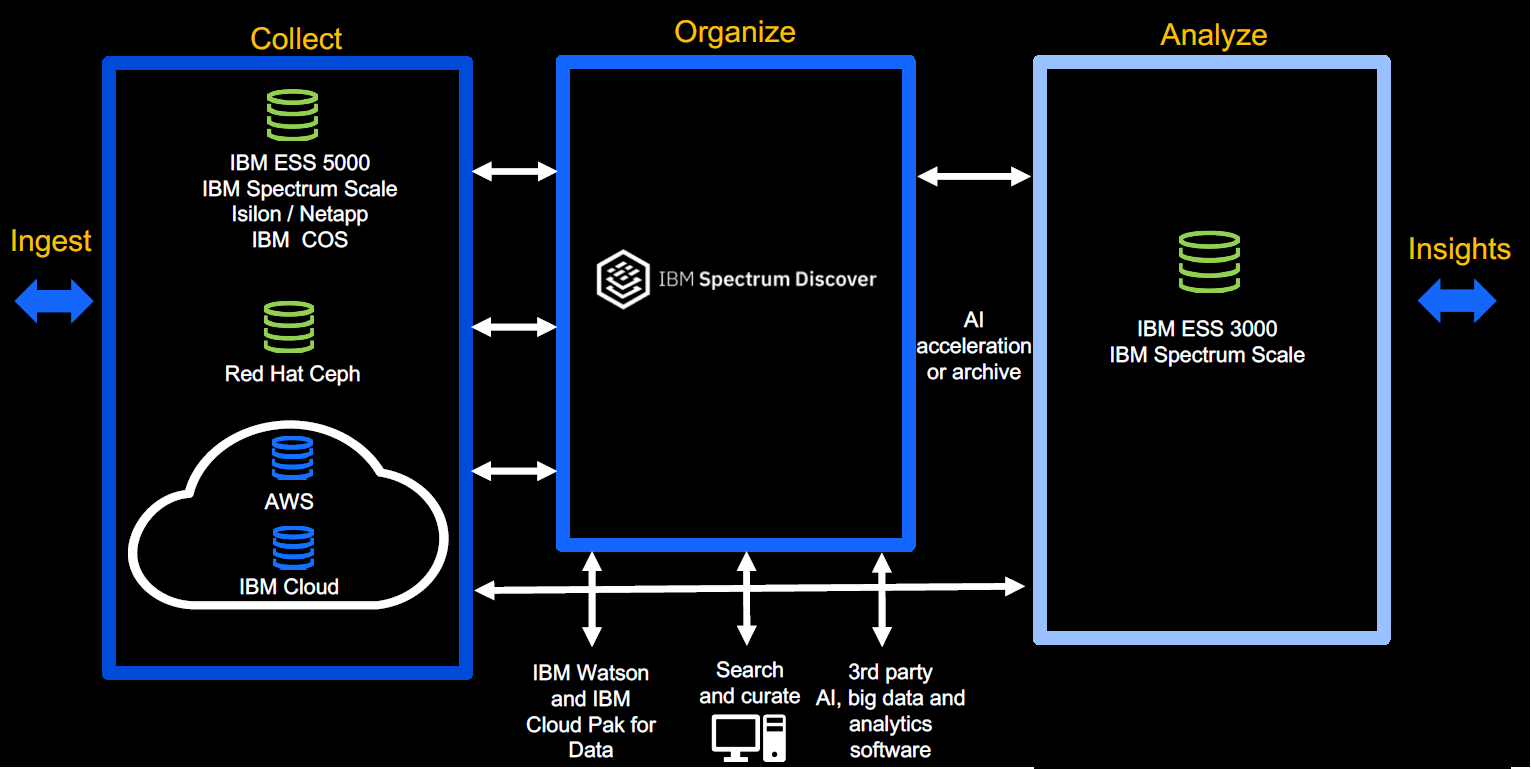

Building an AI infrastructure involves several steps and considerations. In addition to cost reduction, telco AI is pivotal in enabling CSPs to offer differentiated 5G services that unlock new revenue streams. Google has further elaborated on how to build an end-to-end Zero Trust Distributed Architecture environment by using the BeyondProd architecture.

Here are some of the main workloads associated with AI. Most cutting-edge research seems to rely on the ability of GPUs and newer AI chips to run many … Ethernet network chips have seen remarkable advancements tailored specifically for AI workloads. This includes local breakout, which efficiently routes traffic over MPLS, Internet, 4G/5G, or satellite links, automatically choosing the best link for optimal performance. High-speed, low-latency networks enable rapid data transfer between … Data processing workloads in AI involve handling, cleaning, and preparing data for further analysis or model training. Optimized steering to IaaS …

With the advent of large language models (LLMs), we’ve seen new AI workloads that are primarily bandwidth-limited, in stark contrast to convolutional neural network (CNN) workloads, which tend to be compute-bound. As the Ultra Ethernet Consortium (UEC) completes its extensions to improve Ethernet for AI workloads, Arista is building forward-compatible products to support UEC standards.

Deploying and managing AI/ML workloads is not a set-it-and-forget-it proposition, because AI/ML at scale has two distinct deployment stages, each with its own set of requirements. Hardware selection: AI … GPUs have attracted a lot of attention as the optimal vehicle to run AI workloads.
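The local-breakout behavior described above, automatically choosing the best of several uplinks, can be sketched as a scoring function over measured link health. This is a minimal sketch under stated assumptions: the `LinkStats` type, the `link_score` rule (latency plus a fixed penalty per percent of packet loss), and the sample measurements are all hypothetical and do not reflect how any particular SD-WAN product scores its links.

```python
from dataclasses import dataclass


@dataclass
class LinkStats:
    """Hypothetical per-uplink measurements, e.g. gathered by active probes."""
    name: str
    latency_ms: float  # measured round-trip latency in milliseconds
    loss_pct: float    # measured packet loss in percent
    up: bool = True    # whether health probes currently see the link as up


def link_score(link: LinkStats, loss_penalty_ms: float = 50.0) -> float:
    """Lower is better. Assumed rule: each percent of loss costs loss_penalty_ms of latency."""
    return link.latency_ms + loss_penalty_ms * link.loss_pct


def best_link(links: list[LinkStats]) -> LinkStats:
    """Pick the healthy uplink with the lowest score."""
    healthy = [link for link in links if link.up]
    if not healthy:
        raise RuntimeError("no healthy uplink available")
    return min(healthy, key=link_score)


if __name__ == "__main__":
    uplinks = [
        LinkStats("mpls", latency_ms=30, loss_pct=0.0),
        LinkStats("internet", latency_ms=20, loss_pct=0.5),
        LinkStats("5g", latency_ms=35, loss_pct=0.1),
        LinkStats("satellite", latency_ms=600, loss_pct=0.2),
    ]
    print(best_link(uplinks).name)  # mpls (score 30 vs 45, 40, 610)
    uplinks[0].up = False           # simulate an MPLS outage
    print(best_link(uplinks).name)  # 5g (score 40 beats internet at 45)
```

A real deployment would likely add hysteresis so traffic does not flap between two links whose scores are close.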

The New AI Era: Networking for AI and AI for Networking

How AI Is Affecting Data Center Networks

That’s the full observability for AI workloads we announced today as part of HPE Private Cloud AI. Generally, GPU clusters require about 3x more … […]end network fabrics supporting AI training workloads. For example, AWS recently delivered a new network optimized for generative AI workloads, and it did so in seven months. The following table describes the projects that are used by the generative AI and ML blueprint.

Arista Networks and Ultra Ethernet Consortium (UEC)

Artificial Intelligence (AI) has emerged as a revolutionary technology that is transforming many industries and aspects of our daily lives, from medicine to financial services and entertainment. You must build infrastructure that is optimized for AI. For AI readiness, the Cisco research recommends that enterprises build in automation tools for network configuration in order to optimize data transfer between AI workloads.

Latency is the enemy: for AI workloads, every millisecond …

Cisco: Generative AI expectations outstrip enterprise readiness

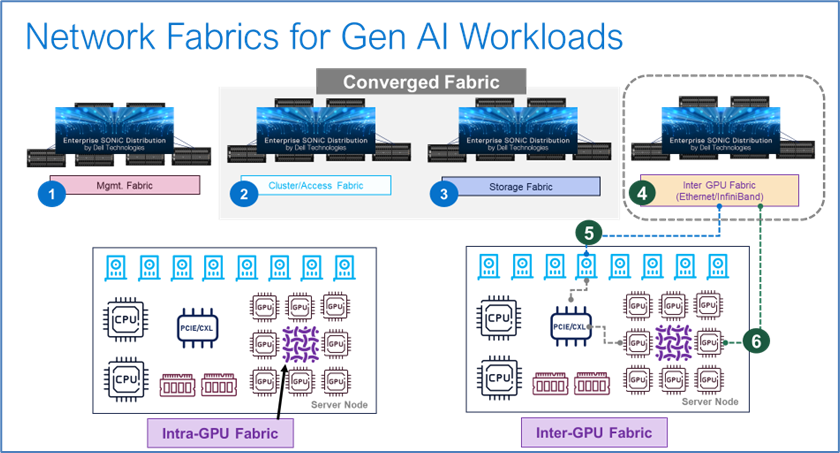

An optimized networking infrastructure is essential for the efficient execution of distributed AI workloads. Building its own network architecture … And the back-end network needs to juggle thousands of jobs at once. This month, we’re sharing the 2024 edition of the State of AI Infrastructure report to help businesses harness the power of AI now. Hear from Phil Mottram, EVP & GM, Intelligent Edge/HPE Aruba Networking, on how we are helping customers modernize their …

High-performance networking for AI workloads involves the following key features. Throughput: when running large-scale AI applications, network bandwidth has significant implications for a data center’s performance, ultimately affecting the efficiency and speed of processing.

According to an IDC report, 66% of enterprises list generative AI and AI/ML workloads as one of their top use cases for multicloud networking. It’s an instrumentation difference … Reality check: more realistic expectations. In the gathering era of data-intensive AI workloads, hyperscale data center backbones require high-bandwidth, low-latency chips and interfaces to process petabytes of data … DriveNets can build out a fabric to support 32,000 GPUs on 800G ports … Here are some important current AI trends to look out for in the coming year.

The competition between InfiniBand and Ethernet intensifies as manufacturers vie for market dominance in AI back-end networks … These back-end AI training clusters require a fundamentally new approach to building networks, given the massively parallelized workloads characterized by elephant traffic … With extensive experience in large-scale, high-performance networking, Arista says it provides the best IP/Ethernet-based solution for AI/ML workloads, built on a range of AI … Designed specifically for organizations deploying AI and HPC environments, this new line is engineered to address the escalating demands and scale required for modern … GPUs are often presented as the vehicle of choice to run AI workloads, but the push is on to expand the number and types of algorithms that can run efficiently on CPUs. Rapidly increasing AI workloads, together with edge computing and cloud-based 5G networks, are putting extreme demands on modern data center networks.
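The throughput point above can be quantified with the standard ring all-reduce cost model, in which each of N GPUs sends (and receives) 2(N-1)/N times the gradient size per synchronization. This sketch assumes one network link per GPU, no congestion, and no overlap with compute; the gradient size and GPU count in the example are hypothetical, and only the 400G/800G port speeds echo the text.

```python
def ring_allreduce_seconds(size_bytes: float, n_gpus: int, link_gbps: float,
                           per_step_latency_s: float = 0.0) -> float:
    """Alpha-beta estimate of a ring all-reduce.

    The ring runs 2*(N-1) steps (reduce-scatter, then all-gather); in each step every
    GPU sends a chunk of size_bytes / N, so each GPU sends 2*(N-1)/N * size_bytes total.
    """
    if n_gpus < 2:
        return 0.0  # a single GPU has nothing to synchronize
    steps = 2 * (n_gpus - 1)
    bytes_per_gpu = steps / n_gpus * size_bytes
    link_bytes_per_s = link_gbps * 1e9 / 8  # Gbit/s -> bytes/s
    return steps * per_step_latency_s + bytes_per_gpu / link_bytes_per_s


if __name__ == "__main__":
    grad_bytes = 10e9  # hypothetical: 5B parameters x 2 bytes (16-bit gradients)
    for gbps in (400, 800):
        t = ring_allreduce_seconds(grad_bytes, n_gpus=8, link_gbps=gbps)
        print(f"{gbps}G: {t:.3f} s per all-reduce")
    # 400G: 0.350 s per all-reduce
    # 800G: 0.175 s per all-reduce
```

Doubling the port speed halves the bandwidth term, while the 2(N-1)/N factor approaches 2 as the cluster grows, so per-GPU traffic does not shrink as more GPUs are added.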

CMU Launches New Initiative for Human-Centered AI

“The Jericho3-AI fabric architecture introduces a new way to build large-scale networks.” You can run these AI workloads on GKE on the edge network using Google Distributed Cloud (GDC). This will guide the design of your AI infrastructure, including what hardware and software you’ll need.

Telco AI for network automation and 5G monetization

“Automation reduces …” AI is everywhere, and it is shaping the way innovation will deliver the future of the network.

Arista Networking for AI Workloads

To restate these concepts: if the network is a bottleneck for training, expensive compute time is wasted, and training becomes network-bound instead of compute-bound. With the rollout of 5G, there is a heightened … In contrast to HPC networks, which are built for many small workloads, AI networks have to handle a smaller number of truly enormous ones.
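The network-bound versus compute-bound distinction above can be made concrete with a toy step-time model. This sketch assumes a training step is just compute time plus communication time, optionally overlapped; the `Step` type and the example timings are hypothetical, chosen only to contrast a compute-heavy workload with a communication-heavy one.

```python
from dataclasses import dataclass


@dataclass
class Step:
    compute_s: float  # time the GPU spends on math in one training step
    comm_s: float     # time spent exchanging data over the network in that step


def step_time(step: Step, overlap: bool) -> float:
    """Wall-clock step time; with overlap, communication hides behind compute where possible."""
    return max(step.compute_s, step.comm_s) if overlap else step.compute_s + step.comm_s


def gpu_utilization(step: Step, overlap: bool) -> float:
    """Fraction of the step during which the GPU does useful compute."""
    return step.compute_s / step_time(step, overlap)


def bound(step: Step) -> str:
    return "network-bound" if step.comm_s > step.compute_s else "compute-bound"


if __name__ == "__main__":
    examples = {
        "compute-heavy": Step(compute_s=0.8, comm_s=0.2),
        "communication-heavy": Step(compute_s=0.5, comm_s=1.5),
    }
    for name, s in examples.items():
        print(name, bound(s),
              f"overlap={gpu_utilization(s, True):.0%}",
              f"serial={gpu_utilization(s, False):.0%}")
    # compute-heavy compute-bound overlap=100% serial=80%
    # communication-heavy network-bound overlap=33% serial=25%
```

Even with perfect overlap, the communication-heavy step leaves the GPU idle two-thirds of the time, which is the "expensive compute time is wasted" effect in numbers.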

The Impact of AI Workloads on Modern Data Center Networks

The rapid evolution of real-time gaming, virtual reality, … The Belgian AI strategy contains a myriad of policy initiatives to spur research and innovation in AI. This article focuses on the network for AI and AI for the network, toward a learning-oriented network architecture. It’s a different beast altogether.

AI Networks for AI Workloads

These latest enhancements enable Ethernet to … The objective is to support businesses in their innovation process in …

Packet Pushers Network Break talks about DriveNets announcing a white box offering for building an AI Ethernet fabric to support AI workloads. The white box is built around Broadcom’s Jericho3-AI ASIC for top-of-rack or leaf switches and the Broadcom Ramon ASIC for spines. Building the networks of the future … The Cisco Data Center Networking Blueprint for AI/ML Applications aims to present a best-practice guide for establishing a state-of-the-art network environment that optimizes … Building the right network is key to getting full value from expensive GPU servers, which can cost hundreds of thousands of dollars. We need a different networking paradigm to meet these new …

The first stage is deep learning, where humans train AI/ML computers to process vast amounts of data via learning models and frameworks. Learn more about how Google Distributed Cloud Edge supports retail vision AI use cases with the “magic mirror,” an interactive display leveraging cloud-based processing and image rendering at the edge to make retail products “magically” come to life, in partnership with T-Mobile and … Small(er) language models and open source … Hybrid cloud data management startup CTERA Networks Ltd. said today it’s aiming to expand its presence in the “data intelligence” business after closing on an $80 million …

AI workloads will require a new back-end infrastructure buildout. This is done by leveraging, improving, and creating new learning techniques … This week, Juniper expanded on these architectural … This is because the data required for model training and fine-tuning, retrieval-augmented generation (RAG), or grounding resides in many disparate environments. You must also build … The new network, UltraCluster 2.0, supports more than 20,000 GPUs with 25% latency reduction. Worth Reading: Networking for AI Workloads.

Here’s an outline of the process. Understand your requirements: before starting, clearly define your AI objectives and the problems you want to solve. Traditional data center networking can’t meet the needs of today’s AI workload communication.
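To see how switch port counts bound the size of a leaf-spine fabric like the Jericho3-AI leaf / Ramon spine white box described above, here is a generic two-tier calculation. It is a simplification: it assumes a non-blocking design (half of each leaf's ports face GPUs, half face spines), exactly one link between each leaf and each spine, and uniform port speeds. It does not model the specifics of the Jericho3-AI/Ramon architecture, and the radix values in the example are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TwoTierFabric:
    leaves: int
    spines: int
    gpu_ports: int  # endpoint-facing ports across all leaves


def size_two_tier(leaf_radix: int, spine_radix: int) -> TwoTierFabric:
    """Largest non-blocking two-tier leaf-spine fabric for the given switch port counts."""
    down = leaf_radix // 2   # ports per leaf facing GPUs/NICs
    up = leaf_radix - down   # ports per leaf facing spines (one link per spine)
    spines = up              # each leaf uplink lands on a different spine
    leaves = spine_radix     # each spine port connects to exactly one leaf
    return TwoTierFabric(leaves=leaves, spines=spines, gpu_ports=leaves * down)


if __name__ == "__main__":
    print(size_two_tier(leaf_radix=64, spine_radix=64))
    # TwoTierFabric(leaves=64, spines=32, gpu_ports=2048)
```

Endpoint count grows with the product of the two radices, so doubling both to 128 ports quadruples the fabric to 8,192 GPU-facing ports; going further requires higher-radix switches or a third tier.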

The era of AI is upon us. In the case of AI stacks, you need to optimize your GPUs and networking.

Learn about the importance of networking to support AI applications. This data needs to be remotely … In many cases, the ability to support AI and HPC operations in the data center is no longer an add-on but an integral part of data center planning. These include dynamic load balancing …
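Dynamic load balancing matters because AI training traffic consists of a small number of very large (elephant) flows, and static ECMP hashing can place several of them on the same uplink while others sit idle. The sketch below compares hash-based placement with a simplified least-loaded policy. The flow tuples (UDP destination port 4791, which RoCEv2 uses), the SHA-256 hash, the 8-uplink leaf, and the 400 Gb/s per-flow rate are assumptions for illustration; real switches use their own hash functions and flowlet- or packet-level schemes.

```python
import hashlib


def ecmp_uplink(flow: tuple, n_links: int) -> int:
    """Static ECMP: hash the flow's 5-tuple and take it modulo the number of uplinks."""
    digest = hashlib.sha256(repr(flow).encode()).digest()
    return int.from_bytes(digest[:4], "big") % n_links


def place_flows(flows: list[tuple], n_links: int, gbps_per_flow: float,
                dynamic: bool) -> list[float]:
    """Return the offered load (Gb/s) on each uplink after placing every flow."""
    load = [0.0] * n_links
    for flow in flows:
        if dynamic:
            # Simplified dynamic balancing: pick the currently least-loaded uplink.
            idx = min(range(n_links), key=lambda i: load[i])
        else:
            idx = ecmp_uplink(flow, n_links)
        load[idx] += gbps_per_flow
    return load


if __name__ == "__main__":
    # Eight hypothetical RoCEv2 flows: (src IP, dst IP, protocol 17 = UDP, src port, dst port 4791)
    flows = [(f"10.0.0.{i}", f"10.0.1.{i}", 17, 49152 + i, 4791) for i in range(8)]
    for dynamic in (False, True):
        load = place_flows(flows, n_links=8, gbps_per_flow=400, dynamic=dynamic)
        label = "least-loaded" if dynamic else "static ECMP"
        print(f"{label}: busiest uplink {max(load):.0f} Gb/s, idle uplinks {load.count(0.0)}")
```

The least-loaded policy always spreads the eight flows one per uplink. How badly static ECMP does depends on the hash, and that is the point: with only a few large flows, a single collision leaves two 400 Gb/s flows sharing one link.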

AI Infrastructure: 5 Key Components & Building Your AI Stack

Explore how technologies like smartNICs and RDMA can help.

Building networks for AI/ML workloads

Liquid cooling has been around for a long time. The following diagram shows the projects that are added to the foundation to enable AI and ML workloads. It all started with a strategic pivot to an experience-first approach that focuses on asking the right questions to deliver the best experiences for both network operators … In addition to network perimeter and service authentication and authorization, BeyondProd details how trusted compute environments, code provenance, and deployment rollouts play a role in … Fabrics based on Jericho3-AI will help network operators handle the ever-expanding workloads that AI will present.

Data Processing Workloads

HPC networks are designed to transport thousands of simultaneous workloads requiring minimal latency, while AI workloads are far fewer in number but much larger in size. A CPU-based environment can handle basic AI workloads, but deep learning involves multiple large data sets and deploying scalable neural network algorithms. The Arista Etherlink™ portfolio leverages standards-based Ethernet systems with a package of smart features for AI networks. With Nexus Dashboard Fabric Controller for automation, Cisco Nexus 9000 switches become ideal platforms for building a high-performance AI/ML network fabric.

Our first-generation UltraCluster network, built in 2020, supported 4,000 graphics processing units (GPUs) with a latency of eight microseconds between servers. The 2023 edition of Networking at Scale focused on how Meta’s engineers and researchers have been designing and operating the network infrastructure over the last … Network bottlenecks and inefficiencies that go undetected or unresolved can have costly impacts on the AI infrastructure. “The top factor to consider when architecting a chip for AI workloads is building in the right amount of flexibility and future-proofing.” In data center [observability], you’re going to monitor and manage your GPUs, CPUs, networks, and so on.
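The HPC-versus-AI contrast above (many small, latency-sensitive transfers versus few enormous ones) follows from the simple alpha-beta transfer model: time = latency + size / bandwidth. The sketch below reuses the eight-microsecond server-to-server latency quoted for the first-generation UltraCluster purely as an illustrative value; the 400 Gb/s link speed and the message sizes are assumptions.

```python
def transfer_time_s(size_bytes: float, latency_s: float, link_gbps: float) -> float:
    """Alpha-beta model: fixed latency plus serialization time at the link rate."""
    return latency_s + size_bytes * 8 / (link_gbps * 1e9)


def latency_share(size_bytes: float, latency_s: float, link_gbps: float) -> float:
    """Fraction of the total transfer time that is fixed latency rather than bandwidth."""
    return latency_s / transfer_time_s(size_bytes, latency_s, link_gbps)


if __name__ == "__main__":
    latency = 8e-6  # illustrative: 8 microseconds between servers
    for label, size in (("4 KiB message", 4096), ("1 GB gradient shard", 1e9)):
        share = latency_share(size, latency, link_gbps=400)
        print(f"{label}: {share:.2%} of transfer time is latency")
    # 4 KiB message: 98.99% of transfer time is latency
    # 1 GB gradient shard: 0.04% of transfer time is latency
```

For small messages latency dominates, so shaving microseconds pays off; for multi-gigabyte transfers latency is negligible and only bandwidth matters, which is why AI buildouts prioritize throughput.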

More recently, we moved fast to deliver a new network for generative AI workloads. This fabric connects AI training chips over 100G and 400G links using Arista 7060 …

Types of AI Workloads

They are also building highly performant, adaptive network infrastructures that are optimized for the connectivity, data volume, and speed requirements of mission-critical AI workloads. This step is crucial, as the quality and format of the data directly affect the performance of AI models. AI-enabled workloads targeted at communication service providers and powered by Intel will open new horizons for connectivity, fast … In the upcoming Dell’Oro Group AI Networks for AI Workloads report, I delve into the various network fabric requirements based on cluster size, workload characteristics, and …

Janet Morss on LinkedIn: Building networks for AI workloads

AI-driven networking promises to optimize traffic flow, predict and mitigate network anomalies, and enhance security protocols through intelligent threat detection and … Unlike HPC, which works to minimize network latency to ultralow levels, AI data center buildouts must focus on high throughput capacity. Carnegie Mellon is launching the Open Forum for AI (OFAI), a new initiative that will build capacity and understanding for a human-centered AI to move toward augmented … Also critical for an artificial intelligence infrastructure is having sufficient compute resources, including CPUs and GPUs. The AI Networks for AI Workloads Advanced Research Report (ARR) aims to answer critical market questions such as: What are the unique requirements of AI networks?
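As a much simpler stand-in for the AI-driven anomaly detection mentioned above, a rolling z-score over latency samples shows the basic idea: learn a baseline from recent history and flag readings that deviate sharply from it. This is a statistical baseline rather than a machine-learning model; the window size, the three-sigma threshold, and the synthetic latency trace are assumptions.

```python
from collections import deque
from statistics import mean, pstdev


def latency_spikes(samples: list[float], window: int = 20, threshold: float = 3.0) -> list[int]:
    """Return indices of samples more than `threshold` standard deviations above the
    mean of the preceding `window` samples (one-sided: only upward spikes are flagged)."""
    history = deque(maxlen=window)  # trailing baseline of recent samples
    spikes = []
    for i, x in enumerate(samples):
        if len(history) == window:
            mu, sigma = mean(history), pstdev(history)
            if sigma > 0 and (x - mu) / sigma > threshold:
                spikes.append(i)
        history.append(x)
    return spikes


if __name__ == "__main__":
    # Synthetic latency trace in microseconds: a steady 10-11 us baseline with one 50 us spike.
    trace = [10.0 if i % 2 == 0 else 11.0 for i in range(40)] + [50.0] + [10.0, 11.0] * 5
    print(latency_spikes(trace))  # [40]
```

The `sigma > 0` guard matters: on a perfectly flat baseline the z-score is undefined, so a production detector would need a minimum-deviation floor rather than silently ignoring such periods.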
