How To Test For Multicollinearity In Python

By: Jacob

Tags: Multicollinearity in Python, Multicollinearity in Regression

How to detect and deal with Multicollinearity

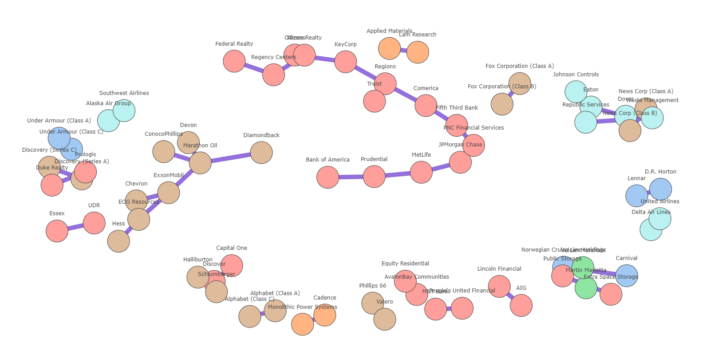

Heteroscedasticity refers to the unequal scatter of residuals at different levels of a response variable in a regression model. The variance inflation factor is VIF = 1 / (1 - R-squared), where R-squared is the coefficient of determination of a linear regression of one predictor on all the others. First, the data is imported and prepared for analysis. Multicollinearity can be visualized in three ways: the de facto heatmap, the clustermap and the interactive network graph.

Notice how the parameter estimates for Weight and Height have changed. It’s important to use np.unique to then get the labels, as this sorts too. First of all, you should be sure that you have multicollinearity.

Detecting Multicollinearity with VIF

Regressors are orthogonal when there is no linear relationship between them. If heteroscedasticity is present, this violates one of the key assumptions of linear regression: that the residuals are equally scattered at each level of the response variable. The model can easily get about 97% accuracy on a test dataset. This topic is part of the Multiple Regression Analysis with Python course. Multicollinearity is a common challenge faced by data analysts and researchers when building regression models. I have to add noise to the matrix.
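As a rough illustration of what VIF measures, the sketch below computes it directly from its definition using only NumPy: each predictor is regressed on the others, and VIF = 1 / (1 - R-squared). The helper name `vif_from_r2` and the synthetic columns are assumptions made for this example, not something taken from the article.

```python
import numpy as np

def vif_from_r2(X):
    """Return VIF_j = 1 / (1 - R_j^2) for every column j of X."""
    X = np.asarray(X, dtype=float)
    n, k = X.shape
    vifs = []
    for j in range(k):
        y = X[:, j]
        others = np.delete(X, j, axis=1)
        # Regress column j on an intercept plus all remaining columns.
        A = np.column_stack([np.ones(n), others])
        coef, *_ = np.linalg.lstsq(A, y, rcond=None)
        resid = y - A @ coef
        centered = y - y.mean()
        r2 = 1.0 - (resid @ resid) / (centered @ centered)
        vifs.append(1.0 / (1.0 - r2))
    return np.array(vifs)

# Synthetic data (assumption): x2 is nearly a copy of x1, x3 is unrelated.
rng = np.random.default_rng(0)
x1 = rng.normal(size=200)
x2 = x1 + rng.normal(scale=0.1, size=200)
x3 = rng.normal(size=200)

vifs = vif_from_r2(np.column_stack([x1, x2, x3]))
print(vifs)
```

The two nearly identical columns should show a large VIF, while the unrelated column should stay close to 1.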

Check correlations between variables and use the variance inflation factor (VIF). In this example, we compute the permutation_importance of the features to a trained RandomForestClassifier using the Breast cancer Wisconsin (diagnostic) dataset.
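A first pass at checking correlations between variables might look like the sketch below. It assumes pandas is available; the column names, the synthetic data and the 0.8 threshold are all illustrative choices rather than anything prescribed here.

```python
import numpy as np
import pandas as pd

# Synthetic predictors (assumption): height is built from weight, age is independent.
rng = np.random.default_rng(1)
n = 300
df = pd.DataFrame({"weight": rng.normal(70, 10, n)})
df["height"] = 100 + df["weight"] + rng.normal(0, 3, n)
df["age"] = rng.normal(40, 12, n)

corr = df.corr()  # pairwise Pearson correlations

# Collect each pair of distinct columns whose absolute correlation exceeds the threshold.
threshold = 0.8
pairs = [
    (a, b, corr.loc[a, b])
    for i, a in enumerate(corr.columns)
    for b in corr.columns[i + 1:]
    if abs(corr.loc[a, b]) > threshold
]
print(pairs)
```

A correlation matrix only looks at two variables at a time, so it is usually paired with a VIF check.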

Multicollinearity • Simply explained

Multicollinearity is a statistical phenomenon in which two or more predictor variables in a regression model are highly correlated. In this blog, I present three ways to visualize multicollinearity.

Multicollinearity in regression analysis occurs when two or more predictor variables are highly correlated to each other, such that they do not provide unique or independent information in the regression model.

Detect and Treat Multicollinearity in Regression with Python

The value of R-squared lies between 0 and 1. If the degree of correlation between predictor variables is high enough, it can cause problems when fitting and interpreting the regression model.

Permutation Importance with Multicollinear or Correlated Features. I want to check the weights before adding the noise and also after adding the noise.
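The idea of comparing weights before and after adding noise can be sketched as follows. Everything here is an assumption for illustration: the helper `ols_weights`, the synthetic features, the noise scale of 0.1, and the noise-free target (chosen so the starting weights are known exactly).

```python
import numpy as np

def ols_weights(X, y):
    """Least-squares weights for y ~ intercept + X, with the intercept dropped."""
    A = np.column_stack([np.ones(len(X)), X])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return coef[1:]

rng = np.random.default_rng(42)
n = 2000
x1 = rng.normal(size=n)
x2 = x1 + rng.normal(scale=0.01, size=n)  # almost a copy of x1
x3 = rng.normal(size=n)                   # independent feature
y = 2 * x1 + 3 * x3                       # noise-free target (assumption)

X = np.column_stack([x1, x2, x3])
w_before = ols_weights(X, y)

# Perturb every entry of the feature matrix with small Gaussian noise.
X_noisy = X + rng.normal(scale=0.1, size=X.shape)
w_after = ols_weights(X_noisy, y)

shift = np.abs(w_after - w_before)
print(w_before, w_after, shift)
```

In this setup the weights of the two near-duplicate columns move by a large amount while the weight of the independent column barely changes, which is the kind of difference the check is looking for.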

Targeting Multicollinearity With Python

In a regression analysis, multicollinearity occurs when two or more predictor variables (independent variables) are highly correlated with each other, such that they do not provide unique or independent information in the regression model. This correlation is not expected, as the independent variables are assumed to be independent. If the degree of this correlation is high, it may cause problems while predicting results from the model. It may cause the model’s coefficients to be inaccurate, making it difficult to gauge how different independent variables will affect the dependent variable. This can lead to the regression coefficients being unstable and no longer being interpretable. Although correlation between the independent and dependent features is desired, multicollinearity among the independent features is not.

Structural multicollinearity: This type occurs when we create a model term using other terms. In other words, it’s a byproduct of the model that we specify rather than something present in the data itself.

Multiple regression assumptions consist of correct specification of the independent variables, no linear dependence among the independent variables, correct functional form of the regression, no autocorrelation of the residuals, homoscedasticity of the residuals and normality of the residuals. Unfortunately, linear dependencies frequently exist in real-life data.

The coefficient for Weight changed from negative to positive. Both Weight and Height are also now highly significant. Another dramatic change is in the accuracy of the estimates.

So far I have checked the tolerance value, VIF and condition indexes. But checking the variance of the regression coefficients, I have to wonder: how little variance of the regression coefficient should be associated with the smallest eigenvalue, and what is too much (indicating multicollinearity)? I want to do a multicollinearity check for Bag of Words for logistic regression. I fixed it by changing how variables was defined and finding another way of deleting its elements.

How to Implement VIF in Python

Then, a correlation matrix is created to assess the relationship between the predictor variables. Variance Inflation Factors (VIF): The correlation matrix only works to detect collinearity between two features, but when it comes to detecting multicollinearity among several features, it fails. Multicollinearity in Python can be tested using the variance_inflation_factor function from the statsmodels package.

Multicollinearity occurs when independent variables in a regression model are highly correlated, leading to issues in interpreting the significance of individual predictors. In other words, one predictor variable can be linearly predicted from the others. When working with multicollinearity in statistical analysis, it’s important to grasp the concept before exploring testing methods. The absence of multicollinearity is one of the main assumptions that needs to hold to get a better estimate of any regression model.

Firstly, thanks to @DanSan for including the idea of parallelization in the multicollinearity computation. I tweaked the code and managed to achieve the desired result, with a little bit of exception handling. No need for statsmodels to add a constant, just do it yourself. I have a two-way "within" fixed effects panel regression model and detected multicollinearity, autocorrelation and heteroskedasticity.

The variance_inflation_factor function in the statsmodels.stats.outliers_influence module estimates the variance inflation factor of each independent variable in a multiple linear regression individually.

Last Update: February 21, 2022.

How to Perform the Goldfeld-Quandt Test in Python

The coefficient for Height changed from positive to negative. I also had issues running something similar. I know this topic because over the past years I have dived into statistics concepts, which are important for anyone working in the field of data science. If the weights differ a lot, then I will know that there is multicollinearity. I’ve been reading a lot about multicollinearity but am still unsure whether to use the Durbin-Watson score, the eigenvalues or the variance inflation factor. Multicollinearity is when two or more features are correlated with each other.
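For the eigenvalue route, one possible sketch is to look at the eigenvalues of the predictors' correlation matrix and the condition indices derived from them. The synthetic data is an assumption, and the threshold of about 30 for a condition index is a commonly quoted rule of thumb, not a value given in this text.

```python
import numpy as np

# Synthetic predictors (assumption): x3 is almost exactly x1 + x2,
# so no single pairwise correlation is extreme.
rng = np.random.default_rng(3)
n = 500
x1 = rng.normal(size=n)
x2 = rng.normal(size=n)
x3 = x1 + x2 + rng.normal(scale=0.05, size=n)
X = np.column_stack([x1, x2, x3])

corr = np.corrcoef(X, rowvar=False)

# eigvalsh returns the eigenvalues of a symmetric matrix in ascending order.
eigvals = np.linalg.eigvalsh(corr)

# Condition index of each eigenvalue: sqrt(largest eigenvalue / eigenvalue).
condition_index = np.sqrt(eigvals.max() / eigvals)

print(eigvals)
print(condition_index)
```

An eigenvalue close to zero signals a near-linear dependency among the predictors. The Durbin-Watson statistic, by contrast, tests for autocorrelation in the residuals, so it addresses a different question.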

How to run a multicollinearity test on a pandas dataframe?

First, we’ll follow in Stata’s footsteps, generating dummies for each of the year fixed effects and leaving out the first value, lexicographically sorted (accomplished with the drop_first=True argument). We use this method to test for multicollinearity, which is the phenomenon where predictor variables are correlated.
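The effect of leaving out the first dummy can be sketched with `pd.get_dummies`. The tiny `year` column is a made-up example; the rank check simply shows that the full set of dummies plus an intercept is linearly dependent, while the reduced set is not.

```python
import numpy as np
import pandas as pd

# Hypothetical panel with a year column (assumption).
df = pd.DataFrame({"year": [2019, 2020, 2021, 2019, 2020, 2021]})

# One dummy per year versus dropping the first (lowest) year.
full = pd.get_dummies(df["year"], prefix="year", dtype=float)
reduced = pd.get_dummies(df["year"], prefix="year", drop_first=True, dtype=float)

# Add an intercept column to both designs.
full_design = np.column_stack([np.ones(len(df)), full.to_numpy()])
reduced_design = np.column_stack([np.ones(len(df)), reduced.to_numpy()])

# The full design has 4 columns but the dummies sum to the intercept,
# so its rank is lower than its column count.
rank_full = np.linalg.matrix_rank(full_design)
rank_reduced = np.linalg.matrix_rank(reduced_design)

print(list(reduced.columns), rank_full, rank_reduced)
```

With the first category dropped, the remaining dummy coefficients are interpreted relative to that omitted year.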

How to Test for Multicollinearity in Python

The process for testing for multicollinearity in Python involves several steps. In this post, we will have a look at how to calculate the variance inflation factor in Python. As the formula VIF = 1 / (1 - R-squared) shows, the greater the value of R-squared, the greater the VIF.

(Image by Author) Correlation matrix with drop_first=False for categorical features

Correlation coefficient scale:

- +1: highly correlated in positive direction
- -1: highly correlated in negative direction
- 0: no correlation

To avoid or remove multicollinearity in the dataset after one-hot encoding using pd.get_dummies, you can drop one of the categories.

Why is Multicollinearity a Problem?

With the advancements in Machine Learning and Deep Learning, we now have an arsenal of algorithms that can handle any problem we throw at them. Multicollinearity is a topic in Machine Learning of which you should be aware. It makes the model hard to interpret and also creates an overfitting problem. Multicollinearity, a common issue in regression analysis, occurs when predictor variables in a model are highly correlated, leading to instability in parameter estimation and difficulty in interpreting the model results accurately.

I am comparatively new to Python, stats and DS libraries. My requirement is to run a multicollinearity test on a dataset having n columns. First, I imported all relevant libraries and data: import pandas as pd, import numpy as np.

Multicollinearity occurs when the independent variables in a regression model exhibit a high degree of interdependence. This leads to instability and unreliable estimates of the regression coefficients, which can severely impact the model’s interpretability and predictive power.

How to Check MultiCollinearity Using VIF in Python?

Last Update: March 2, 2020.

What is multicollinearity in Python? Multicollinearity occurs when the independent variables in a regression model are highly correlated. To give an example, I’m going to use Kaggle’s California Housing Prices dataset. Then, if you want to solve multicollinearity by reducing the number of variables with a transformation, you could use multidimensional scaling with some distance that removes redundancies. The Goldfeld-Quandt test is used to determine if heteroscedasticity is present in a regression model.
