Learn How To Use Grid Search For Parameter Tuning

By: Jacob

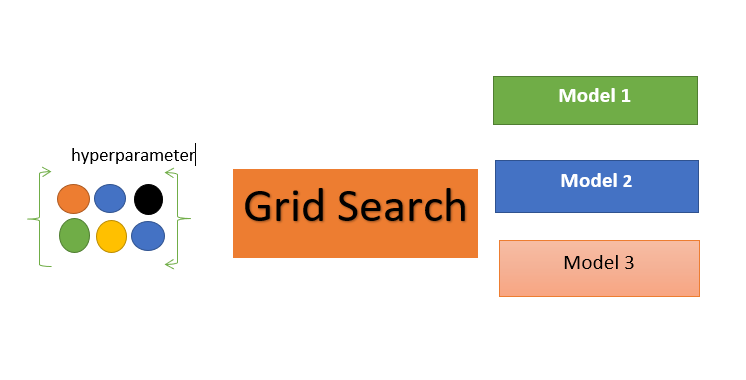

This article covers two very popular hyperparameter tuning techniques, grid search and random search, and shows how to combine them. In scikit-learn, the `GridSearchCV` and `RandomizedSearchCV` APIs are used to perform hyperparameter tuning, and the H2O library offers similar grid search functionality. One example application is optimizing `SVR()` parameters using `GridSearchCV`.

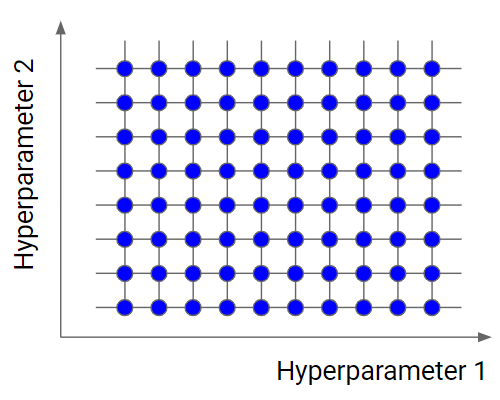

Grid search cross-validation is a popular tuning technique that chooses the best set of hyperparameters for a model by iterating through and evaluating all possible combinations of the given parameter values. Hyperparameters are determined before training, while model parameters are learned from the data. The grid establishes the candidate values for each hyperparameter, and each combination is scored with cross-validation; for example, we might use 10-fold cross-validation to search for the best value of a tuning hyperparameter. In one random forest example, the highest accuracy came from trees that are six levels deep, using 75% of the features for the `max_features` parameter and 10 estimators. If you do not use grid search, you can directly call the `fit()` method on a model with fixed hyperparameters. The same approach can be used to tune a gradient boosting model or applied to a computer vision problem.
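
A minimal sketch of this workflow with scikit-learn's `GridSearchCV` and a random forest follows. The iris dataset and the candidate values are illustrative assumptions; they are not the data or grid behind the result quoted above, so the best parameters found here will differ.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV

X, y = load_iris(return_X_y=True)

# Candidate values for each hyperparameter; GridSearchCV tries every combination (2 x 2 x 2 = 8).
param_grid = {
    "max_depth": [2, 6],
    "max_features": [0.5, 0.75],  # a float means a fraction of the features per split
    "n_estimators": [10, 50],
}

search = GridSearchCV(
    RandomForestClassifier(random_state=0),
    param_grid,
    cv=10,               # 10-fold cross-validation for every combination
    scoring="accuracy",
)
search.fit(X, y)

print(search.best_params_)
print(f"mean CV accuracy: {search.best_score_:.3f}")
```

`best_params_` holds the winning combination and `best_score_` its mean cross-validated accuracy; with the default `refit=True`, the search object is then refit on all the data with those values.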

How to Grid Search Hyperparameters for PyTorch Models

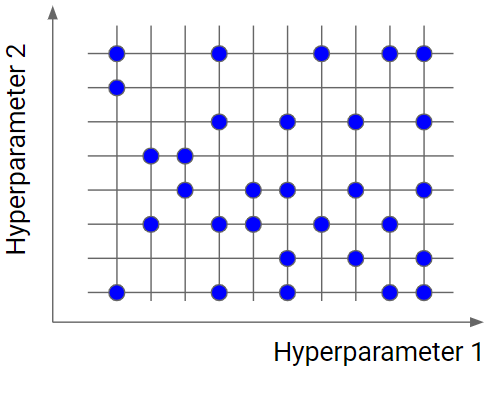

In the journey of machine learning model development, one of the essential steps is algorithm parameter tuning. The grid search capability of the scikit-learn library can also be used to tune the hyperparameters of PyTorch deep learning models, once the model is wrapped as a scikit-learn-compatible estimator. For more complex scenarios, it might be more effective to choose each hyperparameter value randomly; this is called a random search. A common practical question is how to decide on the ranges of hyperparameters to tune, for example when tuning an XGBoost model by following a guide on Kaggle. For multi-metric evaluation, the scores for all the scorers are available in the `cv_results_` dict at the keys ending with that scorer’s name.
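
As an illustration of the multi-metric case, the sketch below scores a decision tree with two scorers. The scorer labels `acc` and `f1`, the iris data, and the `max_depth` values are assumptions chosen for the example.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)

# Two scorers, keyed by labels we choose ("acc" and "f1" are arbitrary names).
scoring = {"acc": "accuracy", "f1": "f1_macro"}

search = GridSearchCV(
    DecisionTreeClassifier(random_state=0),
    {"max_depth": [1, 2, 3, 4]},
    scoring=scoring,
    refit="acc",  # with several scorers, refit names the one used to pick best_params_
    cv=5,
)
search.fit(X, y)

# Per-scorer results sit under keys that end with the scorer's label.
for key in ("mean_test_acc", "mean_test_f1", "rank_test_acc"):
    print(key, search.cv_results_[key])
```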

Hyperparameter tuning by grid-search — Scikit-learn course

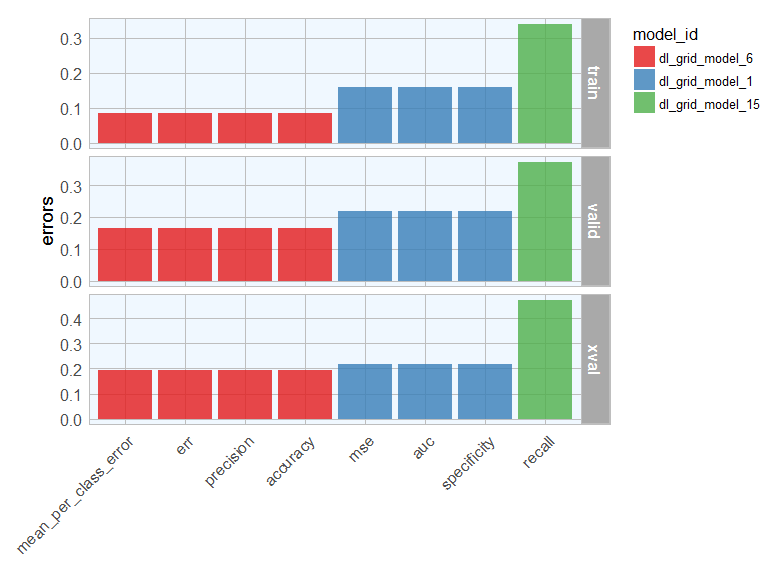

Two generic approaches to parameter search are provided in scikit-learn: for given values, `GridSearchCV` exhaustively considers all parameter combinations, while `RandomizedSearchCV` can sample a given number of candidates from a parameter space with a specified distribution. In grid search [3], we try every possible configuration of the parameters, for example the decision criterion, `max_depth`, and `min_samples_split` of a decision tree. We could also test all possible combinations of parameters with a Cartesian grid (exhaustive search), but a random grid search (RGS) is much faster when there is a large number of possible combinations, and it usually finds sufficiently accurate models.

In the fitted search object’s `cv_results_`, the key `'params'` stores a list of parameter-settings dicts for all the parameter candidates. Once the search is fitted, you can use the grid search object to make new predictions with the best parameters.

Hyperparameter tuning can make the difference between an average model and a highly accurate one. There are several strategies for finding the hyperparameters that make your model stand out, such as grid search and random search. The same tools can grid search common neural network parameters, such as learning rate, dropout rate, epochs, and number of neurons, and `GridSearchCV` can be used to tune the hyperparameters of Keras neural networks. For XGBoost, let’s start by seeing how the number of boosting rounds (the number of trees you build) impacts the out-of-sample performance of the model.
One traditional and popular way to perform hyperparameter tuning is an exhaustive grid search with scikit-learn’s `GridSearchCV`.
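
The sketch below contrasts the two scikit-learn approaches on the same estimator, assuming an `SVC` on the iris data; the grid, the `loguniform` range for `C`, and the budget `n_iter=6` are illustrative choices.

```python
from scipy.stats import loguniform
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV, RandomizedSearchCV, train_test_split
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0, stratify=y)

# Exhaustive: every combination of the listed values (3 x 2 = 6 candidates).
grid = GridSearchCV(SVC(), {"C": [0.1, 1, 10], "kernel": ["linear", "rbf"]}, cv=5)
grid.fit(X_train, y_train)

# Randomized: a fixed budget of 6 candidates sampled from a distribution and a list.
rand = RandomizedSearchCV(
    SVC(),
    {"C": loguniform(1e-2, 1e2), "kernel": ["linear", "rbf"]},
    n_iter=6,
    cv=5,
    random_state=0,
)
rand.fit(X_train, y_train)

# cv_results_["params"] holds one parameter-settings dict per candidate.
print(grid.cv_results_["params"])
print(rand.cv_results_["params"])

# With refit=True (the default), the search object predicts with the best parameters.
print(grid.predict(X_test)[:5], grid.score(X_test, y_test))
```

Both searches fit six candidates here, but only the randomized one can explore a continuous range for `C`; for larger spaces, its fixed `n_iter` budget is what keeps the cost bounded.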

SVM Hyperparameter Tuning using GridSearchCV

Hyperparameter tuning involves adjusting the parameters of a machine learning model. There are many ways to perform hyperparameter optimization, including modern methods such as Bayesian optimization. Fortunately, XGBoost implements the scikit-learn API, so tuning its hyperparameters with grid search or randomized search is straightforward. Alternatively, with XGBoost’s native API you can call `xgb.cv()` inside a for loop and build one model per `num_boost_round` value. While grid and randomized search provide comprehensive coverage of the search space, they can be expensive to run.
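
To keep the example dependent only on scikit-learn, this sketch swaps in scikit-learn’s `GradientBoostingRegressor` for XGBoost: it builds one model per number of boosting rounds in a loop and scores each with cross-validation, mirroring the loop over `num_boost_round` described above. The synthetic dataset and the round counts are assumptions.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.model_selection import cross_val_score

X, y = make_regression(n_samples=200, n_features=5, noise=10.0, random_state=0)

results = {}
for n_rounds in [10, 50, 100]:
    # One model per number of boosting rounds (trees), scored out-of-sample with 3-fold CV.
    model = GradientBoostingRegressor(n_estimators=n_rounds, random_state=0)
    scores = cross_val_score(model, X, y, cv=3, scoring="neg_root_mean_squared_error")
    results[n_rounds] = -scores.mean()  # flip the sign back to a positive RMSE

for n_rounds, rmse in results.items():
    print(f"{n_rounds:>3} rounds: CV RMSE = {rmse:.2f}")
```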

GridSearchCV on LogisticRegression in scikit-learn

A grid search algorithm must be guided by some performance metric, typically measured by cross-validation on the training set or evaluation on a held-out validation set. Using this method, we can find the best set of values in the parameter search space. Rather than tuning by hand, we can train many models over a grid of possible hyperparameter values. The grid is supplied as a Python dictionary that maps parameter names to lists of candidate values.

A common question is how to optimize a logistic regression model in scikit-learn with a cross-validated grid parameter search. Grid search and random search are primitive optimization algorithms, however, and it is possible to use any optimization algorithm we like to tune the performance of a machine learning algorithm; for more on both methods, see the tutorial “Hyperparameter Optimization With Random Search and Grid Search”. For neural networks, hyperparameter tuning aims to improve the model by adjusting the hyperparameters of the network. For clustering, the clusteval library helps you evaluate the data and find the optimal number of clusters.
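
For the logistic regression question, here is a hedged sketch of a cross-validated grid search, assuming the breast cancer dataset bundled with scikit-learn and a grid over the regularization strength `C` only. Wrapping the model in a pipeline with scaling is a design choice, not a requirement; it changes the grid keys to the `<step>__<parameter>` form.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)

# Scaling first helps the solver converge; make_pipeline names the steps
# "standardscaler" and "logisticregression".
pipe = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))

# The grid is a dict: "<step name>__<parameter name>" -> list of candidate values.
param_grid = {"logisticregression__C": [0.01, 0.1, 1.0, 10.0]}

search = GridSearchCV(pipe, param_grid, cv=5, scoring="accuracy")
search.fit(X, y)

print(search.best_params_, round(search.best_score_, 3))
```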

For XGBoost, you’ll use `xgb.cv()` to cross-validate each candidate configuration.

Tune Hyperparameters with GridSearchCV

In this short tutorial, we have seen:

- how to implement a grid search and use it to optimize the hyperparameters of a predictive model;
- that searching over more than two hyperparameters is too costly, because the number of combinations grows multiplicatively;
- that a grid search does not necessarily find an optimal solution.

Hyperparameters are not learned during training; they are set before training and significantly impact the model’s performance and behaviour. Grid search is a good choice for exploring smaller hyperparameter spaces.
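
To make the cost argument concrete: the number of candidates is the product of the number of values tried for each hyperparameter, and every candidate is fitted once per cross-validation fold. The helper name `grid_cost`, the five values per hyperparameter, and the 5 folds are assumptions for illustration.

```python
from math import prod


def grid_cost(values_per_param, n_folds=5):
    """Return (candidates, fits) for a full grid search, ignoring the final refit."""
    n_candidates = prod(values_per_param)  # Cartesian product size
    return n_candidates, n_candidates * n_folds


# Five candidate values per hyperparameter, 5-fold cross-validation.
for n_params in range(1, 5):
    candidates, fits = grid_cost([5] * n_params)
    print(f"{n_params} hyperparameter(s): {candidates} candidates, {fits} fits")
```

With five values each, adding a third hyperparameter takes 5-fold cross-validation from 125 to 625 fits, and a fourth to 3,125.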

The traditional method for hyperparameter optimization has been grid search, or a parameter sweep, which is simply an exhaustive search through a manually specified subset of the hyperparameter space of a learning algorithm. The same idea applies beyond scikit-learn estimators: we can automate the process of training and evaluating ARIMA models on different combinations of model hyperparameters, and tools such as Ray Tune also support hyperparameter tuning. For clustering, the clusteval library (installed with `pip install clusteval`) can be used for hyperparameter evaluation; depending on your data, the evaluation method can be chosen.
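
A parameter sweep needs nothing more than the Cartesian product of the candidate lists. The plain-Python sketch below is illustrative: `grid_search` and `toy_score` are hypothetical names, and the toy objective stands in for a cross-validated model score.

```python
from itertools import product


def grid_search(objective, grid):
    """Exhaustively evaluate objective(**params) for every combination in grid.

    grid maps each hyperparameter name to a list of candidate values.
    Returns (best_params, best_score), where higher scores are better.
    """
    names = list(grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = objective(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


# Hypothetical toy objective standing in for a cross-validated score;
# it peaks at learning_rate=0.1, depth=4.
def toy_score(learning_rate, depth):
    return -((learning_rate - 0.1) ** 2) - 0.01 * (depth - 4) ** 2


best, score = grid_search(toy_score, {"learning_rate": [0.01, 0.1, 0.3], "depth": [2, 4, 6]})
print(best, score)
```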

H2O provides the `h2o.grid()` function to perform a Random Grid Search (RGS). Using grid search for hyperparameter tuning has the following advantages: grid search explores all specified combinations, ensuring you don’t miss the best hyperparameters within the defined search space, and you can specify which hyperparameters to tune. Many models have hyperparameters that can’t be learned directly from a single data set when training the model, and manual tuning is laborious and can still leave a model with quite poor predictive power. `GridSearchCV` from scikit-learn can also be used to tune an XGBoost classifier. To grid search a Keras model, we need to pass the hyperparameters being tuned as arguments to our `create_model()` function. The basic procedure is to define a grid on n dimensions, one per hyperparameter, and evaluate every point on it.
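
The sketch below is not H2O’s implementation; it is a plain-Python illustration of the random grid search idea under stated assumptions: draw a fixed number of distinct combinations from the Cartesian grid instead of evaluating all of them. `random_grid_search`, `toy_score`, and the grid values are hypothetical.

```python
import random
from itertools import product


def random_grid_search(objective, grid, n_iter, seed=0):
    """Score n_iter distinct combinations sampled from the Cartesian grid.

    Returns (best_params, best_score, n_evaluated); higher scores are better.
    """
    names = list(grid)
    combos = list(product(*(grid[name] for name in names)))  # the full Cartesian grid
    rng = random.Random(seed)
    sampled = rng.sample(combos, k=min(n_iter, len(combos)))  # without replacement
    scored = []
    for values in sampled:
        params = dict(zip(names, values))
        scored.append((objective(**params), params))
    best_score, best_params = max(scored, key=lambda item: item[0])
    return best_params, best_score, len(sampled)


# Hypothetical toy objective standing in for a cross-validated score.
def toy_score(learning_rate, depth, subsample):
    return -((learning_rate - 0.1) ** 2) - 0.01 * (depth - 4) ** 2 - (subsample - 0.8) ** 2


grid = {
    "learning_rate": [0.01, 0.03, 0.1, 0.3],
    "depth": [2, 3, 4, 5, 6],
    "subsample": [0.6, 0.8, 1.0],
}
best, score, n = random_grid_search(toy_score, grid, n_iter=15)
print(f"evaluated {n} of {4 * 5 * 3} combinations; best = {best}, score = {score:.4f}")
```

With a budget of 15, only a quarter of the 60 combinations are scored, so the result may miss the true optimum; raising `n_iter` to the grid size turns this back into an exhaustive search.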

Hyper-parameter Tuning Techniques in Deep Learning

There are more advanced methods as well. In grid search, we choose a set of candidate values for each hyperparameter and then try every combination of them, which is much easier than trying all parameters by hand. Here, you’ll continue working with the Ames housing dataset.


You’ll be able to find the optimal set of hyperparameters for a model. We’ll start by implementing a grid search.


Hyperparameter tuning with GridSearch with various parameters

All parameters in the grid search that don’t start with `base_estimator__` are AdaBoost’s own, and the others are forwarded to the object we pass as the `base_estimator` argument (a `DecisionTreeClassifier` in the sample). In scikit-learn 1.2 and later, this argument is named `estimator`, so the prefix becomes `estimator__`. The grid still tunes AdaBoost’s own `n_estimators` and `learning_rate` parameters.

What is grid search? Grid search is a hyperparameter tuning technique commonly used in machine learning to find the best combination of hyperparameters for a given model. It tries every possible combination of the candidate values for each hyperparameter. I assume that you have already preprocessed the dataset and split it into training and test sets, so I will focus only on the tuning part. For simplicity, use a grid search: try all combinations of the discrete parameters and just the lower and upper bounds of the real-valued parameter.

There are two main approaches to searching for the best configuration: grid search and random search. Now that we’ve established a baseline score, let’s see if we can beat it using hyperparameter tuning with scikit-learn.
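
One way to run this comparison, sketched under assumptions (the wine dataset, an `SVC` pipeline, and the candidate values are illustrative): compute a cross-validated baseline with default hyperparameters, then run `GridSearchCV` with a grid that includes those defaults.

```python
from sklearn.datasets import load_wine
from sklearn.model_selection import GridSearchCV, cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = load_wine(return_X_y=True)
pipe = make_pipeline(StandardScaler(), SVC())

# Baseline: default hyperparameters (C=1.0, gamma="scale"), 5-fold CV.
baseline = cross_val_score(pipe, X, y, cv=5).mean()

# The grid includes the defaults, so on the same folds the best CV score
# cannot fall below the baseline.
param_grid = {"svc__C": [0.1, 1.0, 10.0], "svc__gamma": ["scale", 0.01, 0.1]}
search = GridSearchCV(pipe, param_grid, cv=5)
search.fit(X, y)

print(f"baseline: {baseline:.3f}  tuned: {search.best_score_:.3f}  {search.best_params_}")
```

Because both use the same five stratified folds and the default configuration is one of the candidates, `best_score_` is at least the baseline. Keep in mind that `best_score_` is optimistically biased, since it was used to select the winner; a held-out test set gives a fairer final estimate.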

GridSearchCV

Hyperparameter optimization refers to performing a search in order to discover the set of specific model configuration arguments that result in the best performance of the model on a specific dataset. In machine learning, you train models on a dataset and select the best-performing model. Grid search is the simplest algorithm for hyperparameter tuning: it builds and evaluates a model for every combination of algorithm parameters specified in a grid. Often, simple things like choosing a different learning rate or changing a network layer size can have a dramatic impact on your model’s performance. In this tutorial, you will learn how to use the `GridSearchCV` class from the scikit-learn machine learning library to perform grid search hyperparameter tuning with cross-validation. In cases where the datasets are smaller, such as univariate time series, it may be possible to use a grid search to tune deep learning models as well.

For clustering, the clusteval library contains five methods that can be used to evaluate clusterings: silhouette, dbindex, derivative, dbscan, and hdbscan.
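
The clusteval API is not shown here. As a hedged sketch of the same idea using only scikit-learn, the loop below treats the number of clusters as a one-dimensional grid and scores each k-means result with the silhouette coefficient, one of the methods listed above. The synthetic blob data is an assumption.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.metrics import silhouette_score

# Synthetic data with 4 generated clusters (an assumption for illustration).
X, _ = make_blobs(n_samples=300, centers=4, cluster_std=0.6, random_state=0)

scores = {}
for k in range(2, 8):  # the "grid" is a single hyperparameter: the number of clusters
    labels = KMeans(n_clusters=k, n_init=10, random_state=0).fit_predict(X)
    scores[k] = silhouette_score(X, labels)  # higher is better, range [-1, 1]

best_k = max(scores, key=scores.get)
print(best_k, round(scores[best_k], 3))
```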

Are You Still Using Grid Search for Hyperparameters Optimization?

Grid searching is generally not practical with deep learning methods. This is because deep learning methods often require large amounts of data and large models, which together result in models that take hours, days, or weeks to train. To tune an XGBoost classifier instead, we first import `XGBClassifier` from the `xgboost` package and `GridSearchCV` from scikit-learn.

Grid search: `GridSearchCV` methodically explores various combinations of hyperparameter values within a predetermined grid. It computes a score (accuracy, by default, for classifiers) for each set of parameters and selects the one yielding the best result. Its main drawback is that it is computationally intensive. Exhaustive grid search and random search are among the most common techniques used for hyperparameter tuning; later in this guide, you’ll also learn how to use a random search for hyperparameter tuning.

Hyperparameter Tuning with Grid Search and Random Search

Bartosz Mikulski, 19 Aug

`GridSearchCV` uses cross-validation to score each candidate. To use the code in this article, you will need to install the following R packages: kernlab, mlbench, and tidymodels. So, let’s get to the pros and cons of grid search.

Basically, we divide the domain of the hyperparameters into a discrete grid, and we still have grid search to try and save the day. In the resulting `cv_results_`, the `mean_fit_time`, `std_fit_time`, `mean_score_time`, and `std_score_time` values are all in seconds.
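
To see what the search records for each grid point, the sketch below prints selected `cv_results_` columns. The k-nearest-neighbors model, the iris data, and the odd `n_neighbors` values from 1 to 15 are illustrative assumptions.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV
from sklearn.neighbors import KNeighborsClassifier

X, y = load_iris(return_X_y=True)

# A discrete grid over one hyperparameter's domain: 1, 3, ..., 15 neighbors.
search = GridSearchCV(KNeighborsClassifier(), {"n_neighbors": list(range(1, 16, 2))}, cv=5)
search.fit(X, y)

res = search.cv_results_
for params, score, rank, fit_s, score_s in zip(
    res["params"], res["mean_test_score"], res["rank_test_score"],
    res["mean_fit_time"], res["mean_score_time"],
):
    # The timing columns are in seconds.
    print(f"{params}  acc={score:.3f}  rank={rank}  fit={fit_s:.4f}s  score={score_s:.4f}s")
```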

