Neuralnet Training – How to train and validate a neural network model in R?

By: Jacob

This project contains the code to train the neural nets for my opentrack head tracker.

Figure 3: (a) During training, a unit (neuron) is present with probability p and is connected to the next layer with weights w; (b) during inference/prediction, a unit is always present and is connected to the next layer with weights pw (image by Nitish). In the original implementation of the dropout layer, during training, a unit (node/neuron) in a ...

The neuralnet package trains neural networks using backpropagation, resilient backpropagation with (Riedmiller, 1994) or without weight backtracking (Riedmiller and Braun, 1993), or the ...

A related question in R is feature selection before neural network classification.

The training and test data are taken from the normalized data frame: `trainset <- maxmindf[1:160, ]` and `testset <- maxmindf[161:200, ]`. The next step is training a neural network model using neuralnet.
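The dropout behaviour in the Figure 3 caption can be sketched in plain Python. This is only an illustration under stated assumptions: the function names `dropout_train` and `dropout_inference` are hypothetical, the activations are a flat list, and scaling the activations by p at inference stands in for using weights pw.

```python
import random

def dropout_train(activations, p_keep, rng):
    """Training: each unit is present with probability p_keep, otherwise zeroed."""
    return [a if rng.random() < p_keep else 0.0 for a in activations]

def dropout_inference(activations, p_keep):
    """Inference: every unit is present; scaling by p_keep mirrors weights p*w."""
    return [a * p_keep for a in activations]

rng = random.Random(0)          # fixed seed so the sketch is repeatable
acts = [1.0] * 10000            # hypothetical layer activations
kept = dropout_train(acts, 0.8, rng)
train_mean = sum(kept) / len(kept)
infer_mean = sum(dropout_inference(acts, 0.8)) / len(acts)
print(train_mean, infer_mean)   # both should be close to 0.8
```

The point of the scaling is that the expected input to the next layer is the same in both modes, which is why the two means agree.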

NeuralNine

Thus, neural networks are used as extensions of generalized linear models. This is done by calling the transform ... While early artificial neural networks were physical machines, today they are almost always implemented in software. In batch training, the network is updated only after all input vectors have been presented.

Training Neural Networks for Beginners

neuralnet: Training of Neural Networks


How to train and validate a neural network model in R?

The Neural Net Fitting app lets you create, visualize, and train a two-layer feed-forward network to solve data fitting problems. In R, nnet provides the opportunity to train feed-forward neural networks with traditional backpropagation, and in AMORE, the TAO robust neural network algorithm is ...

Training Neural Networks

Training Neural Network with Keras and basics of Deep Learning

neuralnet is built to train multilayer perceptrons in the context of regression analyses, i.e. to approximate functional relationships between covariates and response variables. When normalizing, the training data is used to estimate the minimum and maximum observable values. In machine learning, a neural network is an artificial mathematical model used to approximate nonlinear functions.
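The normalization rule above (estimate the minimum and maximum from the training data only) can be sketched as follows. This is a minimal plain-Python illustration, not the R workflow itself: `fit_min_max`, `apply_min_max` and the 200-row data set are hypothetical, and the 80/20 split simply takes the first 160 rows for training.

```python
def fit_min_max(rows):
    """Estimate per-column minimum and maximum from the training rows only."""
    columns = list(zip(*rows))
    return [min(c) for c in columns], [max(c) for c in columns]

def apply_min_max(rows, mins, maxs):
    """Scale rows with previously fitted bounds; constant columns map to 0."""
    return [
        [(v - lo) / (hi - lo) if hi > lo else 0.0
         for v, lo, hi in zip(row, mins, maxs)]
        for row in rows
    ]

# Hypothetical 200 x 2 data set, split 80% / 20% by position.
data = [[float(i), float(2 * i + 1)] for i in range(200)]
split = len(data) * 4 // 5
train_rows, test_rows = data[:split], data[split:]

mins, maxs = fit_min_max(train_rows)            # fit on training data only
train_scaled = apply_min_max(train_rows, mins, maxs)
test_scaled = apply_min_max(test_rows, mins, maxs)  # reuse the training bounds
```

Because the bounds come from the training rows, scaled test values can fall slightly outside [0, 1]. That is expected and avoids leaking test information into the scaler.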

Techniques for training large neural networks

Once the neural network has fit the data, it forms a generalization of ... Training a deep neural network that can generalize well to new data is a challenging problem.

Recall from the Feature Crosses unit that some classification problems are nonlinear.

Training data is fed to the bottom layer (the input layer) and passes through the succeeding layers, getting multiplied and added together in complex ways, until it finally arrives, radically transformed, at the output layer. You can use the createDataPartition() function in caret to split your data set. Neural networks are trained using stochastic gradient descent and require that you choose a loss function when designing and configuring your model.

Train shallow neural network

The fastest training function is generally trainlm, and it is the default training function for feedforwardnet. First, a collection of software "neurons" are created and connected ...

In this post, we cover the essential elements required for training neural networks for an image classification problem. Neural networks rely on training data to learn and improve their accuracy over time. The Dense class requires a single parameter: the number of neurons you'd like to include in the new layer of your neural net. A callback is a type of object that can be used to accomplish tasks at different points of the training process (i.e. at the start or end of an epoch, or before or after a single batch).

Typically one epoch of training is defined as a single presentation of all input vectors to the network. Backpropagation is the most common training algorithm for neural networks.

Training algorithm improvements that speed up training across a wide variety of workloads (e.g., better update rules, tuning protocols, learning rate schedules, or data selection schemes) could save time, save computational resources, and lead to ...

While the initial training and testing on these four wells provide a robust evaluation of model performance within that subset of data, it does not fully confirm the ...
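To make the definition of an epoch concrete, here is a small sketch of batch training for a single linear neuron in plain Python. It is an assumption-laden illustration: `train_batch`, the learning rate and the toy data are hypothetical, and plain gradient descent on mean squared error stands in for whichever training function a real toolbox would use.

```python
def train_batch(inputs, targets, epochs, learning_rate):
    """Fit y = w * x + b with batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(inputs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        # One epoch: present every input once and accumulate the gradients.
        for x, t in zip(inputs, targets):
            error = (w * x + b) - t
            grad_w += 2.0 * error * x / n
            grad_b += 2.0 * error / n
        # Batch mode: update only after all presentations.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

xs = [0.0, 0.25, 0.5, 0.75, 1.0]
ts = [2.0 * x + 1.0 for x in xs]   # toy targets generated from y = 2x + 1
w, b = train_batch(xs, ts, epochs=2000, learning_rate=0.5)
print(round(w, 4), round(b, 4))
```

Moving the two update lines inside the inner loop would turn this into per-example (online) training, which is the other common convention.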

CRAN: Package neuralnet

The following tips and tricks could be beneficial for your research and could help you speed up a network ... Training deep neural networks is difficult.

Function fitting is the process of training a neural network on a set of inputs in order to produce an associated set of target outputs.

For a more detailed introduction to neural networks, Michael Nielsen's Neural Networks and Deep Learning is a good place to start. PyTorch is a powerful Python library for building deep learning models. Tasks in speech recognition or image recognition can take minutes versus hours when ...

There are many loss functions to choose from, and it can be challenging to know what to choose, or even what a loss function is and the role it plays when training a neural network. Another issue to watch for is the vanishing gradient.
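As a rough guide to what a loss function is, the sketch below implements two common choices in plain Python: mean squared error for regression and binary cross-entropy for two-class problems. The function names and the clipping constant `eps` are assumptions made for this example.

```python
import math

def mean_squared_error(targets, predictions):
    """Average squared difference, typically used for regression."""
    return sum((t - p) ** 2 for t, p in zip(targets, predictions)) / len(targets)

def binary_cross_entropy(targets, probabilities, eps=1e-12):
    """Average negative log-likelihood for 0/1 targets."""
    total = 0.0
    for t, p in zip(targets, probabilities):
        p = min(max(p, eps), 1.0 - eps)   # clip to avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(targets)

print(mean_squared_error([1.0, 2.0], [1.0, 4.0]))
print(binary_cross_entropy([1, 0], [0.9, 0.1]))
print(binary_cross_entropy([1, 0], [0.1, 0.9]))
```

Both functions return a single number that is lower for better predictions. Training adjusts the weights to push that number down.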

Neural network

Schematic of a simple feedforward artificial neural network.

In an overfitted model, the training accuracy is high but the validation accuracy is low, leading to a highly unstable model that does not yield the best results. This blog will help readers understand and solve the following issues while training neural networks: overfitting vs. underfitting.

We now load the neuralnet library into R. Having normalized the data, you can use it to train your model.

Nonlinear means that you can't accurately predict a label with a model of the form \(b + w_1x_1 + w_2x_2\). In other words, the decision surface is not a line.
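A tiny example makes the nonlinearity claim tangible. The sketch below uses four hypothetical points whose label is 1 when \(x_1\) and \(x_2\) share a sign. It searches a coarse grid of linear models of the form \(b + w_1x_1 + w_2x_2\) and compares the best one with a single crossed feature \(x_1x_2\). The grid and the data are assumptions chosen for illustration.

```python
from itertools import product

points = [(-1.0, -1.0), (-1.0, 1.0), (1.0, -1.0), (1.0, 1.0)]
labels = [1, 0, 0, 1]   # 1 when x1 and x2 share a sign

def accuracy(predict):
    """Fraction of the four points that a classifier labels correctly."""
    hits = sum(predict(x1, x2) == y for (x1, x2), y in zip(points, labels))
    return hits / len(points)

# Try every linear model b + w1*x1 + w2*x2 on a coarse grid of coefficients.
grid = [i / 2.0 for i in range(-4, 5)]
best_linear = max(
    accuracy(lambda x1, x2, b=b, w1=w1, w2=w2: int(b + w1 * x1 + w2 * x2 > 0))
    for b, w1, w2 in product(grid, repeat=3)
)

# A single crossed feature separates the classes perfectly.
crossed = accuracy(lambda x1, x2: int(x1 * x2 > 0))
print(best_linear, crossed)
```

No straight line gets more than three of the four points right, while the crossed feature gets all four. Hidden layers let a network learn such combinations instead of requiring them to be engineered by hand.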

Data Dimensionality Reduction Using a Neural Autoencoder with C#

Neurons in an artificial neural network are usually arranged into ... A neural network is based very loosely on how we think the human brain works. In a previous blog post we discussed general concepts surrounding deep learning. The quasi-Newton method, trainbfg, is also quite fast.

neuralnet: Training of Neural Networks

Description: Train neural networks using backpropagation, resilient backpropagation (RPROP) with (Riedmiller, 1994) or without weight backtracking (Riedmiller and Braun, 1993), or the modified globally convergent version (GRPROP) by Anastasiadis et al.
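For intuition about resilient backpropagation without weight backtracking, here is a simplified sketch in plain Python. It is not the neuralnet implementation: `rprop_minimize`, its default constants and the quadratic test function are assumptions for this example. The key idea it shows is that only the sign of each partial derivative is used, and every weight keeps its own step size.

```python
def sign(v):
    """Return -1, 0 or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)

def rprop_minimize(gradient, weights, steps=200, eta_plus=1.2, eta_minus=0.5,
                   step_init=0.1, step_min=1e-6, step_max=50.0):
    """Simplified resilient backpropagation without weight backtracking."""
    weights = list(weights)
    step_sizes = [step_init] * len(weights)
    previous = [0.0] * len(weights)
    for _ in range(steps):
        grads = gradient(weights)
        for i, g in enumerate(grads):
            if previous[i] * g > 0:      # same sign as last time: accelerate
                step_sizes[i] = min(step_sizes[i] * eta_plus, step_max)
            elif previous[i] * g < 0:    # sign flip: the last step overshot
                step_sizes[i] = max(step_sizes[i] * eta_minus, step_min)
            # Move against the gradient sign; the magnitude of g is ignored.
            weights[i] -= sign(g) * step_sizes[i]
            previous[i] = g
    return weights

# Hypothetical objective: f(w) = (w0 - 3)^2 + 10 * (w1 + 1)^2
result = rprop_minimize(lambda w: [2 * (w[0] - 3), 20 * (w[1] + 1)], [0.0, 0.0])
print([round(v, 3) for v in result])
```

The two coordinates have very different gradient magnitudes, yet both converge at a similar pace because only the signs matter. Variants with weight backtracking additionally undo the last step after a sign flip.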

A Neural Network Playground

The function allows flexible settings. After you construct the network with the desired hidden layers and the training algorithm, you must train it using a set of training data.

The reported error (...0017) is very close to the MSE of the dataset being reduced, which indicates that the autoencoder is not overfitted.

Loss and Loss Functions for Training Deep Learning Neural Networks

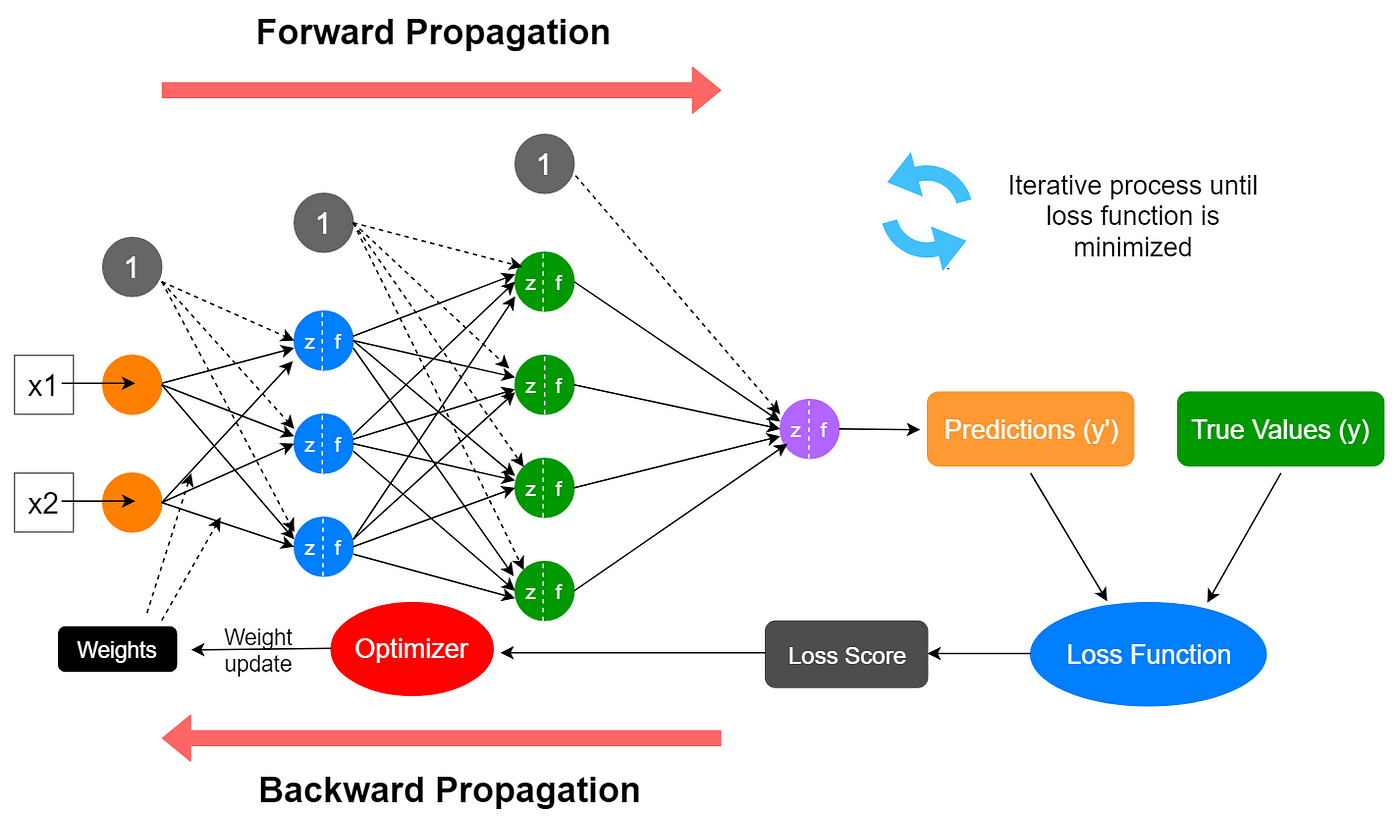

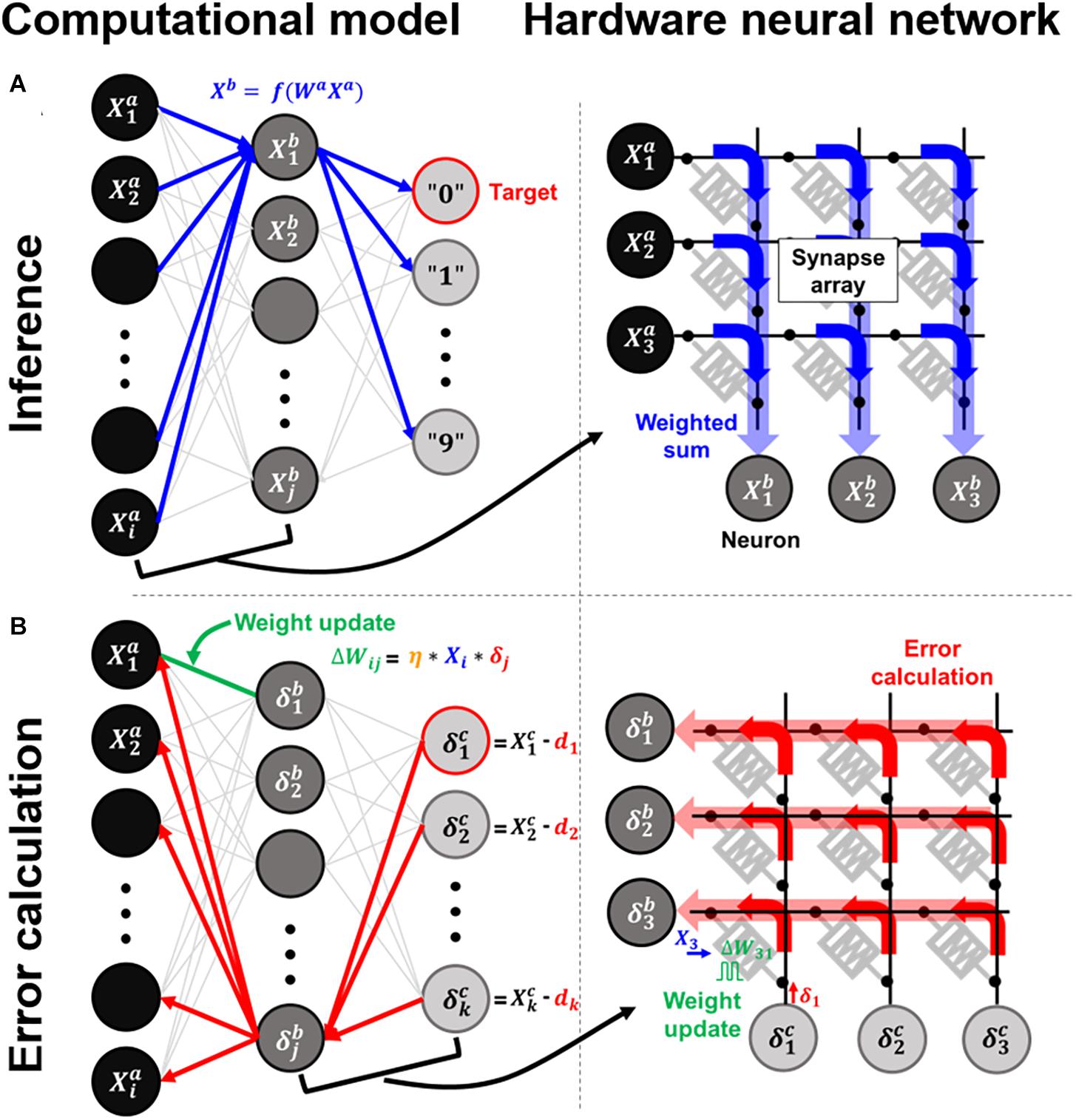

train calls the function indicated by net.trainFcn, using the training parameter values indicated by net.

Many layers inside a neural network are parameterized, i.e. they have associated weights and biases that are optimized during training. When a neural net is being trained, all of its weights and thresholds are initially set to random values. To train (the process by which the model maps the relationship between the training data and the outputs), the neural network updates its parameters, the weights \(w^T\) and biases \(b\), to satisfy the model equation. Backpropagation makes gradient descent feasible for multi-layer neural networks. In this video, we explain the concept of training an artificial neural network.

Commonly, PNN proposals use in silico training, ...

A related question is caret retraining of a neural network after finding optimal ...
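The training loop described above (random initial weights, a forward pass, then weight and bias updates via backpropagation) can be sketched in plain Python. Everything here is an assumption for illustration: the 2-3-1 layout, sigmoid activations, squared error, the learning rate and the logical-OR data. It is not the method of any particular package.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(params, x):
    """Forward pass through a 2-input, H-hidden, 1-output network."""
    w1, b1, w2, b2 = params
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(w1, b1)]
    output = sigmoid(sum(w * h for w, h in zip(w2, hidden)) + b2)
    return hidden, output

def train_step(params, x, target, lr):
    """One forward and backward pass for a single example (squared error)."""
    w1, b1, w2, b2 = params
    hidden, output = forward(params, x)
    # Error term at the output for loss = 0.5 * (output - target)^2.
    delta_out = (output - target) * output * (1.0 - output)
    # Propagate the error back to the hidden units using the old w2.
    delta_hidden = [delta_out * w * h * (1.0 - h) for w, h in zip(w2, hidden)]
    new_w2 = [w - lr * delta_out * h for w, h in zip(w2, hidden)]
    new_b2 = b2 - lr * delta_out
    new_w1 = [[w - lr * d * xi for w, xi in zip(row, x)]
              for row, d in zip(w1, delta_hidden)]
    new_b1 = [b - lr * d for b, d in zip(b1, delta_hidden)]
    return new_w1, new_b1, new_w2, new_b2

def mean_loss(params, data):
    return sum(0.5 * (forward(params, x)[1] - t) ** 2 for x, t in data) / len(data)

rng = random.Random(42)
H = 3  # hypothetical number of hidden units
params = (
    [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(H)],  # random weights
    [rng.uniform(-1, 1) for _ in range(H)],                      # random biases
    [rng.uniform(-1, 1) for _ in range(H)],
    rng.uniform(-1, 1),
)
data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0),
        ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]  # logical OR
initial_loss = mean_loss(params, data)
for _ in range(2000):
    for x, t in data:
        params = train_step(params, x, t, lr=0.5)
final_loss = mean_loss(params, data)
print(initial_loss, final_loss)
```

Note that only the weights and biases change during training. The number of hidden units and the learning rate are hyperparameters that stay fixed throughout.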

We understood the difference between these neural networks and a traditional network and built an ... We will still treat the internal network architecture as a ...

Um, what is a neural network? It's a technique for building a computer program that learns from data. Artificial neural networks are applied in many situations. Once neural networks are fine-tuned for accuracy, they are powerful tools in computer science and artificial intelligence, allowing us to classify and cluster data at a high velocity. In this article, we explored deep neural networks and understood their core concepts.

Since caret handles selection of hyperparameters for you, you just need a training set and a test set. Once training is complete, trainnet returns the trained network. Both trainlm and trainbfg tend to be less efficient for large networks (with thousands of weights), since they require more memory and more computation time for these cases.

The home page of the opentrack project is https://github.com/opentrack/opentrack.

So far, the training of PNNs has predominantly relied on backpropagation (BP).

Multi-layer Perceptron (MLP) is a supervised learning algorithm that learns a function \(f: R^m \rightarrow R^o\) by training on a dataset, where \(m\) is the number of dimensions ...

How to Avoid Overfitting in Deep Learning Neural Networks

For a more technical overview, try Deep Learning by Ian Goodfellow and co-authors. Colors show data, neuron and weight values. A major advantage of quantum AI is that quantum algorithms can efficiently solve problems that for ...

Callbacks can be used to keep TensorBoard logs after each batch of training, so you can keep track of your measurements, and to save your model to disk on a regular basis.

A model with too little capacity cannot learn the problem, whereas a model with too much capacity can learn it too well and overfit the training dataset. Both cases result in a model that does not generalize well.

Training algorithms, broadly construed, are an essential part of every deep learning pipeline. In this blog post, we will go deeper into the basic concepts of training a (deep) neural network. In this post, I would like to share what I have learned in training deep neural networks. Training requires knowledge and experience in order to obtain an optimal model.

The paper by Frauke Günther and Stefan Fritsch (2010) gives a brief introduction to multilayer perceptrons and resilient backpropagation and demonstrates the application of neuralnet using the data set infert, which is contained in the R distribution.

The intuitive way to do it is to take each training example, pass it through the network to get the number, subtract it from the actual number we wanted to get and ...

Fit the scaler using the available training data, then apply the scale to the training data. We base our training data (trainset) on 80% of the observations. The test data (testset) is based on the remaining 20% of observations.

PyTorch provides everything you need to define and train a neural network and use it for inference. The tutorial covers the model building, compiling, training, and evaluation. After you click the stop button, it can take a while for training to complete.

Large neural networks are at the core of many recent advances in AI, but training them is a difficult engineering and research challenge which requires orchestrating a cluster of GPUs to perform a ...
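Callbacks and overfitting meet in early stopping: a callback watches the validation loss at the end of each epoch and halts training once it stops improving. The sketch below is a plain-Python illustration. The `EarlyStopping` class, `run_training` and the validation curve are hypothetical stand-ins, not the API of Keras or any other library.

```python
class EarlyStopping:
    """Callback-style object: call on_epoch_end after every epoch."""

    def __init__(self, patience):
        self.patience = patience
        self.best_loss = float("inf")
        self.best_epoch = None
        self.wait = 0

    def on_epoch_end(self, epoch, val_loss):
        """Return True when training should stop."""
        if val_loss < self.best_loss:
            # New best: remember it (a real callback would save the model here).
            self.best_loss, self.best_epoch, self.wait = val_loss, epoch, 0
            return False
        self.wait += 1
        return self.wait >= self.patience

def run_training(val_losses, callback):
    """Stand-in training loop that replays precomputed validation losses."""
    for epoch, loss in enumerate(val_losses):
        if callback.on_epoch_end(epoch, loss):
            return epoch
    return len(val_losses) - 1

# Hypothetical validation curve: improves, then rises as the model overfits.
curve = [0.90, 0.60, 0.45, 0.40, 0.42, 0.47, 0.55, 0.70]
stopper = EarlyStopping(patience=2)
stopped_at = run_training(curve, stopper)
print(stopped_at, stopper.best_epoch, stopper.best_loss)
```

With a patience of 2, training stops two epochs after the best validation loss, and the model saved at the best epoch is the one to keep.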

Building Neural Network (NN) Models in R

Using this app, you can import data from file, the MATLAB® workspace, or use one of the example data sets. During training, you can stop training and return the current state of the network by clicking the stop button in the top-right corner. Specify the OutputNetwork training option as best-validation to get finalized ... Training occurs until a maximum number of ...

Training is done by calling the fit() function. The learning (training) process of a neural network is an iterative process in which the calculations are carried out forward and backward through each layer in ...

Yet, there are several reasons why BP is not a suitable choice for PNNs, one of which is the complexity and lack of scalability in the physical implementations of BP operations in the hardware (25–28).

Theory guided Lagrange programming neural network (TGLPNN) combines the method of Lagrange programming neural network approach where ...

How To Build And Train An Artificial Neural Network

Moreover, we have learned how to train a simple neural network using `neuralnet` and a convolutional neural network using `keras`. In PyTorch, subclassing nn.Module automatically tracks all fields defined inside your model object, and makes all parameters accessible using your model's parameters() or named_parameters() methods. neuralnet is a very flexible ... Each training input is loaded into the neural network in a process called forward ...

Quantum AI could solve problems that are considered unsolvable today.
