Self-Supervised Learning Across Domains

By: Jacob

Silvia Bucci, Antonio D'Innocente, Yujun Liao, Fabio M. Carlucci, Barbara Caputo and Tatiana Tommasi. arXiv 2020. STRUCT Group Seminar. Presenter: Wenjing Wang, 2020.

However, in reality, it is hard to have good ...


In some applications, however, it is expensive even to collect labels in the source domain, making most previous works impractical.

Self-Supervised Learning Across Domains. Abstract: Human adaptability relies crucially on learning and merging knowledge from both supervised and unsupervised tasks: the parents point out a few important concepts, but then the children fill in the gaps on their own. To the best of our knowledge, we are among the first to union the ...

Self-supervised representation learning has achieved impressive results in recent years, with experiments primarily run on ImageNet or other similarly large internet imagery datasets.

In this paper, we propose an end-to-end Prototypical Cross-domain Self-Supervised Learning (PCS) framework for Few-shot Unsupervised Domain Adaptation (FUDA). To cope with this problem, recent work performed instance-wise cross ...

We investigate the utility of in-domain self-supervised pretraining of vision models in the analysis of remote sensing imagery.

Self-Supervised Domain Adaptation: compared methods

- MMD based: DAN, JAN
- Adversarial learning: DANN
- Batch normalization based: Dial, DDiscovery
- Increasing ...

In what follows, we highlight specific examples of self-supervised pre-training tasks across medical domains and data types in its two major forms: contrastive learning and generative learning [6,7].

But supervised pretraining comes at a cost. We experiment with several ...

Outline: Authorship, Background, Proposed Method, Experimental Results, Conclusion.

Self-supervised learning has a wide range of applications across various domains. In natural language processing, models like BERT and GPT-3 use self-supervised learning to understand and generate human language in applications such as chatbots, translation, and text summarization.

The value of exploiting unlabelled data via self-supervised learning to improve ID performance has been studied in several individual medical-imaging domains, such as pathology [60,61], dermatology ...

... has led to successful transfer learning across images, video, and text (SNLI Dataset, Kinetics Dataset) [Deng et al., 2009] [Carreira et al., 2017].

Now let's talk about the utility of self-supervised learning in different domains.

MI acts as a proxy for domain-related information and is used as ...


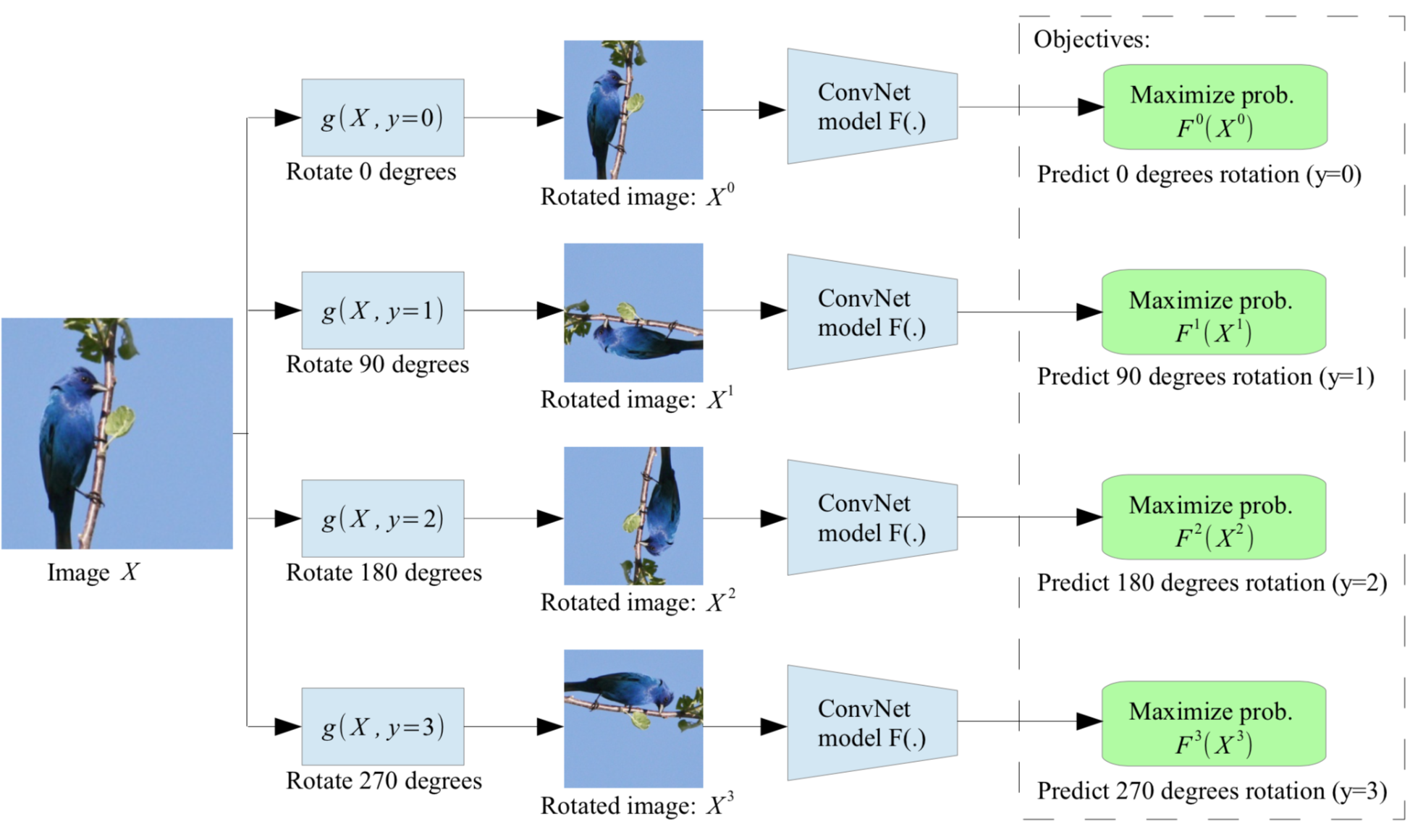

This clear separation between learning intrinsic regularities from images (self-supervised knowledge) and robust classification across domains (supervised knowledge) is in ...

- Time-consuming and expensive to label datasets for new tasks
- ImageNet: 3 years, 49k Amazon Mechanical Turkers [1]
- Domain ...

Extending and Analyzing Self-Supervised Learning Across Domains (1 code implementation, in PyTorch). The repository contains implementations for Supervision, Jigsaw, Rotation, Instance Discrimination and Autoencoding on 17 standardized 64×64 image datasets (MINC has been added since the writing of the original paper).

![Self-Supervised Learning [Explained]](https://iq.opengenus.org/content/images/2022/11/1-9vTNRbANVbDYGVDUpE2EFw.jpeg)

This secondary task helps the network learn concepts such as spatial ... This is particularly effective, because supervised learning can never be exhaustive and ...

Applications of self-supervised learning. Unlike traditional supervised learning, SSL aims to learn ...

BramSW/Extending

To evaluate the performance of SDR, we carried out systematic ASV experiments based on the corpus under the condition of mask-wearing.

Extending and Analyzing Self-supervised Learning Across Domains


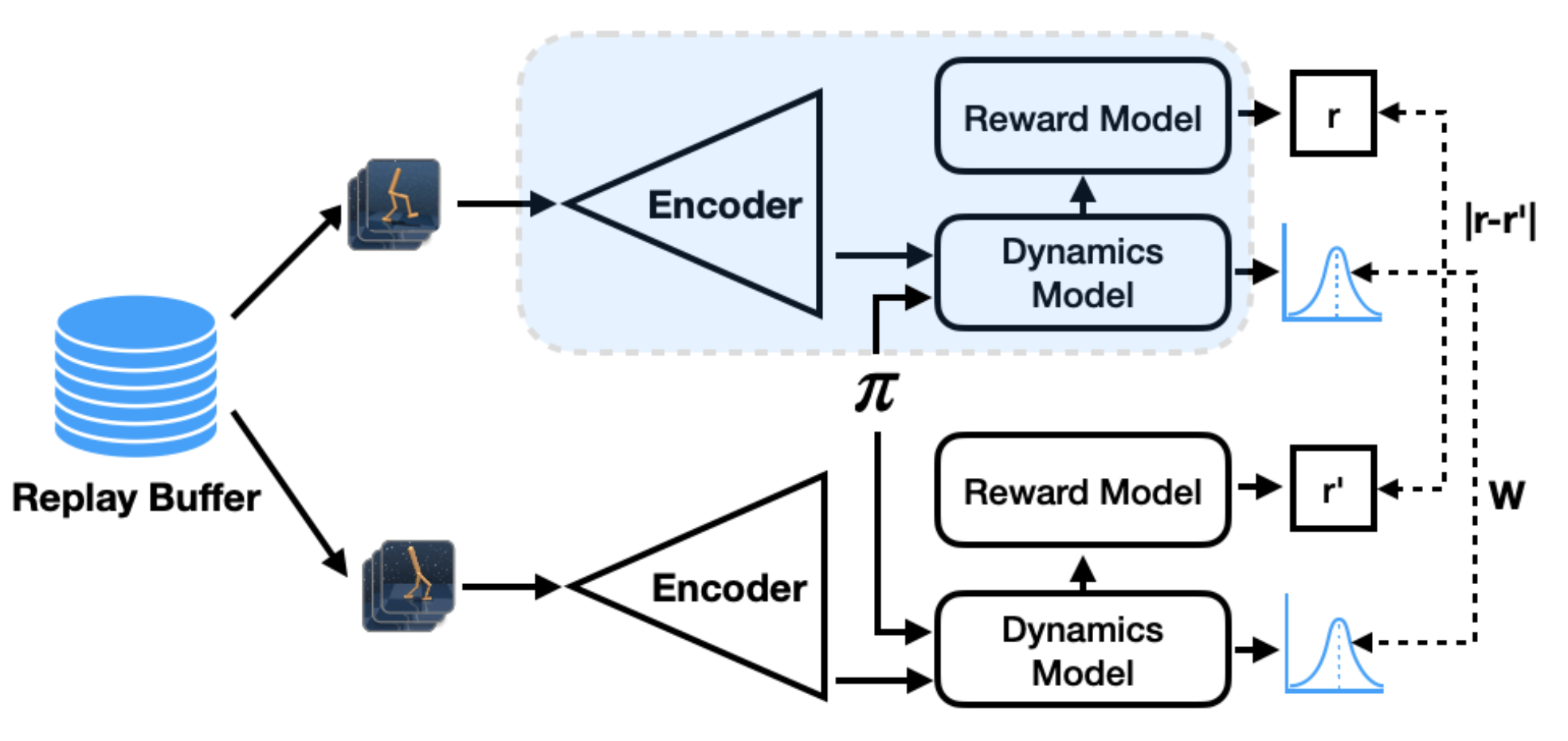

In the proposed SDR, self-supervised learning implicitly reduces the distribution mismatch across domains with less change in the discriminative structure between speakers.

In this paper we propose to apply a similar approach to the problem of object recognition across domains: our model learns the semantic labels in a supervised fashion, and broadens its understanding of the data by learning from self-supervised signals on the same images.

Self-supervised learning applications in Computer Vision.

In this paper, we investigate an effective cross-domain few-shot model in a self-supervised manner.

Our framework unifies some popular Self-Supervised Learning (SSL) techniques (e.g., rotation angle prediction, strong/weak data augmentation, mean teacher modeling) and adapts them to the XDD task.

In contrast to previous self-supervised learning methods, our approach learns from multiple domains, which has the benefit of decreasing the built-in bias of an individual domain, as well as leveraging information and allowing knowledge transfer across multiple ...

Our self-supervised learning method captures apparent visual similarity with in-domain self-supervision in a domain adaptive manner and performs cross-domain feature matching with across-domain self-supervision. Experimental results showed that the ...

We use satellite and map imagery across 2 domains, 3 million locations and more than 1500 cities. In extensive experiments with three standard benchmark datasets, our method significantly boosts performance of target ...

Unsupervised Domain Adaptation (UDA) transfers predictive models from a fully-labeled source domain to an unlabeled target domain. As a vital problem in pattern analysis and machine intelligence, UDA attempts to transfer an effective feature learner from a labeled source ...

[70] define good representations as ...

The strategy, which we named REMEDIS (for 'Robust and Efficient Medical Imaging with Self-supervision'), combines large-scale supervised transfer learning on natural images ...

(a) Overview of the self-supervised instance-prototype contrastive learning (IPCL) model, which learns instance-level representations without category or instance labels.
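Rotation angle prediction, one of the SSL techniques named above, can be illustrated with a minimal sketch. It is not taken from any of the quoted papers: the toy 2D-grid image type and the helper names `rotate90` and `make_rotation_batch` are assumptions made for illustration. The point it shows is that the pretext label (the rotation index) is generated from the image itself, so no human annotation is needed in either domain.

```python
from typing import List, Tuple

Image = List[List[int]]  # toy stand-in for an image: a 2D grid of pixel values


def rotate90(img: Image, k: int) -> Image:
    """Rotate a 2D grid counter-clockwise by k * 90 degrees."""
    out = [list(row) for row in img]
    for _ in range(k % 4):
        # Transposing and then reversing the row order is a 90-degree
        # counter-clockwise rotation.
        out = [list(col) for col in zip(*out)][::-1]
    return out


def make_rotation_batch(images: List[Image]) -> List[Tuple[Image, int]]:
    """Pair every image with each of its four rotations and the rotation index.

    The index k in {0, 1, 2, 3} is the pretext label. It comes for free,
    so the same routine applies to labeled and unlabeled images alike.
    """
    return [(rotate90(img, k), k) for img in images for k in range(4)]


if __name__ == "__main__":
    toy = [[1, 2], [3, 4]]
    for rotated, label in make_rotation_batch([toy]):
        print(label, rotated)
```

A network trained to predict `label` from `rotated` has to pick up on object orientation and layout, which is the kind of signal the pretext task is meant to provide.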

We leverage image data from multiple related domains to perform a self-supervised learning task. ... constraint for self-supervised representation learning from multiple related domains.

(b) t-SNE visualization of 500 ...

We show that self-supervised methods can build a generalizable representation from as few as 200 cities, with representations achieving over 95% accuracy in unseen cities with minimal additional training.

Our basic idea is to leverage the unsupervised nature of these SSL techniques and apply them simultaneously across domains (source and ...

silvia1993/Self-Supervised_Learning_Across_Domains.

This paper introduces domain generalization using a mixture of multiple latent domains as a novel and more realistic scenario, where we try to train a domain-generalized model ...

Self-supervised learning (SSL) has emerged as a promising approach for remote sensing image classification due to its ability to exploit large amounts of unlabeled data.

For many years the focus of learning methods in computer vision has been on perfecting the model architecture while assuming we have high-quality data.

In this paper, we propose Prototypical Cross-domain Self-supervised learning, a novel single-stage framework for FUDA that unifies representation learning and domain ...

Our approach is based on self-supervised learning of a Bridge Across Domains (BrAD): an auxiliary bridge domain accompanied by a set of semantics-preserving visual (image-to-image) mappings to BrAD from each of the training domains.

The BrAD and mappings to it are learned jointly (end-to-end) with a contrastive self-supervised representation model ...

This is the PyTorch code for the paper: Extending and Analyzing Self-Supervised Learning Across Domains.

Self-Supervised Learning Across Domains. Silvia Bucci, Antonio D'Innocente, Yujun Liao, Fabio M. ...

There has been little to no work with these methods on other smaller domains, such as satellite, textural, or biological imagery.

PCS not only performs cross-domain low-level feature alignment, but it also encodes and aligns semantic structures in the shared embedding space across domains.

Analyzing self-supervised learning across target domains is another way to define and evaluate benchmarks for unsupervised approaches [8, 42, 64].

We also find that the performance discrepancy of ...
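To make the term "contrastive self-supervised" concrete, here is a minimal sketch of a generic InfoNCE-style loss in plain Python. It is not the BrAD or PCS objective, whose details are not given in this text. The function names, the cosine similarity measure, and the default temperature of 0.1 are all assumptions chosen for illustration.

```python
import math
from typing import List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_u * norm_v)


def info_nce(anchor: Sequence[float],
             positive: Sequence[float],
             negatives: List[Sequence[float]],
             temperature: float = 0.1) -> float:
    """InfoNCE-style loss: -log softmax of the positive pair's similarity.

    The loss is low when the anchor is closer to its positive (for example,
    another view of the same image) than to any of the negatives.
    """
    sims = [cosine(anchor, positive)] + [cosine(anchor, n) for n in negatives]
    logits = [s / temperature for s in sims]
    # Log-sum-exp with the max subtracted for numerical stability.
    m = max(logits)
    log_denominator = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_denominator - logits[0]


if __name__ == "__main__":
    anchor = [1.0, 0.0]
    print(info_nce(anchor, [0.9, 0.1], [[0.0, 1.0], [-1.0, 0.0]]))
```

In a cross-domain setting the positive and the negatives could be drawn from different domains, but how pairs are formed is a design choice of each method and is not specified here.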


We evaluate and analyze multiple self-supervised learning techniques (Rotation, Instance Discrimination and Jigsaw) on the broadest benchmark yet of 16 domains ...

By combining the supervised and self-supervised objectives we aim at learning a representation better able to capture discriminative cues, helpful in recognizing the ...
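One common way to combine a supervised and a self-supervised objective is a weighted sum of two cross-entropy terms. The sketch below assumes that form. The weight `alpha`, its default value, and the function names are hypothetical and are not taken from the papers quoted here.

```python
import math
from typing import Sequence


def cross_entropy(logits: Sequence[float], target: int) -> float:
    """Cross-entropy of a single example, computed from raw logits."""
    # Log-sum-exp with the max subtracted for numerical stability.
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[target]


def multitask_loss(class_logits: Sequence[float], class_label: int,
                   pretext_logits: Sequence[float], pretext_label: int,
                   alpha: float = 0.5) -> float:
    """Supervised loss plus alpha times the self-supervised (pretext) loss.

    alpha is an assumed trade-off weight; alpha = 0 recovers plain
    supervised training.
    """
    supervised = cross_entropy(class_logits, class_label)
    self_supervised = cross_entropy(pretext_logits, pretext_label)
    return supervised + alpha * self_supervised


if __name__ == "__main__":
    # Three object classes, four pretext classes (e.g. four rotations).
    print(multitask_loss([2.0, 0.5, -1.0], 0, [0.1, 0.2, 3.0, 0.0], 2))
```

For unlabeled images only the pretext term can be computed, so a training loop built on this idea would skip the supervised term for those samples.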
