Sklearn.Metrics.F1_Score _ sklearn中 F1-micro 与 F1-macro区别和计算原理

Di: Jacob

metrics import f1_score f1 = f1_score(y_true, y_pred) „` 其中,`y_true`和`y_pred`都是一维的数组或列表,分别表示真实标签和预测标签,数组的每个元素对应一个样本的标签。还给出了代码示例和输出结果,以及f1 . Please refer to the full user guide for further details, as the raw specifications of classes and functions may not be enough to give full guidelines on their uses.Schlagwörter:Machine LearningScikit-learnF1 ScoreSklearn Metrics6w次,点赞52次,收藏196次。metrics import f1_score f1_score(df.datasets import make_classification from sklearn. It is defined as the average of recall obtained on each class. 计算F1分数,也称为平衡F分数或F度量值.You can create your own metric function with make_scorer. Accuracy classification score.f1_score(y_true, y_pred, labels=None, pos_label=1, average=’binary‘, sample_weight=None) [source] ¶ Compute the F1 score, also known as balanced F-score or F-measure.Schlagwörter:Scikit-learnMetric Functions

How to Calculate F1 Score in Python (Including Example)

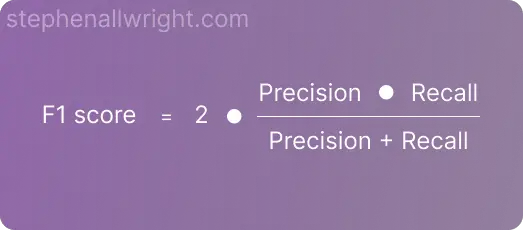

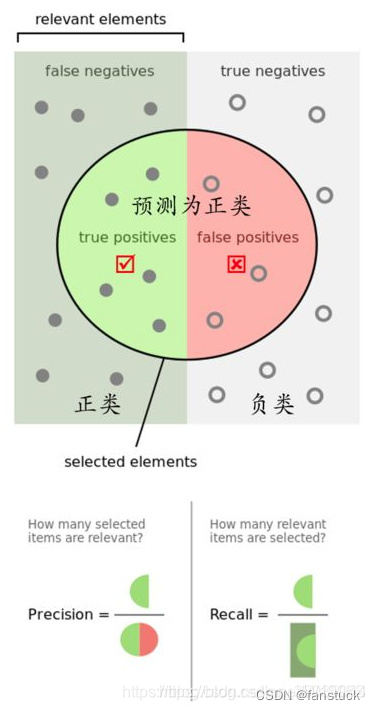

機械学習における 分類問題の性能評価 のために、Pythonで評価指標を出力する方法を解説します。 ライブラリはScikit-learn (サイキット・ラーン)を用い、正解率・適合率・再現率・F値を出力するコーディング .f1_score函数的参数、公式和计算方法,以及在二分类和多分类情况下的不同平均方式。 scoringstr or callable. Dictionary returned if output_dict is True.load_model(model_path, custom_objects= {‚f1_score‘: f1_score}) Where f1_score is the function that you passed .I am trying to calculate macro-F1 with scikit in multi-label classification.最近在使用sklearn做分类时候,用到metrics中的评价函数,其中有一个非常重要的评价函数是F1值,在sklearn中的计算F1的函数为 f1_score ,其中有一个参数average用来控制F1的计算方式,今天我们就说说当参数取 . F1分数的公式为:.Schlagwörter:Machine LearningF1_Score PythonF1 Score Sklearn y_true = [[1,2,3]] y_pred = [[1,2,3]] print .precision_score, sklearn.Text summary of the precision, recall, F1 score for each class.zeros((1,5)) y_pred[:] = 1 # => prediction = [[1, . y_true:1d array-like, or label indicator array / sparse matrix; y_pred:1d array-like, or label indicator array / sparse matrix; average: 计算类型 .

基于sklearn计算precision、recall等分类指标

Schlagwörter:Machine LearningScikit-learnF1 Score The balanced accuracy in binary and multiclass classification problems to deal with imbalanced datasets. The F1 score can be interpreted as a harmonic mean of the precision and recall, where an F1 score reaches its best . It is accepted in all scikit-learn .]) Compute the F1 score, also known as balanced F-score or F-measure.f1_score用法. 在多类别和多标签的情况下,为每一类的 . Compute the balanced accuracy. roc_auc_score (y_true, y_score, *, average = ‚macro‘, sample_weight = None, max_fpr = None, multi_class = ‚raise‘, labels = None) [source] # Compute Area Under the Receiver Operating Characteristic Curve (ROC AUC) from prediction scores.values) Define your own function that duplicates f1_score, using the formula above.Schlagwörter:Scikit-learnWeighted F1 Score

F-1 Score for Multi-Class Classification

The Matthews correlation coefficient is used in machine learning as a measure of the quality of binary and multiclass classifications.Schlagwörter:Machine LearningWeighted F1 ScoreF-1 Score

Macro VS Micro VS Weighted VS Samples F1 Score

In the Python sci-kit learn library, we can use the F-1 score function to calculate the per class scores of a multi-class classification problem.0, labels=None, pos_label=1, average=None, warn_for=(‚precision‘, ‚recall‘, ‚f-score‘), . The F1 score can be interpreted as a weighted average of the precision and recall, where an F1 score reaches its best value . Juli 2015Weitere Ergebnisse anzeigenSchlagwörter:F1 Score For MultilabelMultilabel Classification

[Sklearn] 파이썬 성능평가 지표 함수 정리 : accuracy

API Reference#. Calculate accuracy by comparing the true labels ytest with the predicted .f1_score¶ sklearn. 참고로, 양성 기준 라벨을 변경하고 싶다면 pos_label 인자 를 설정해주시면 되며(기본 값 = 1), 라벨 종류가 3개 이상인 경우에는 . We need to set the average parameter to None .f1_score (y_true, y_pred, labels=None, pos_label=1, average=’binary‘, sample_weight=None, zero_division=’warn‘) [source] ¶ Compute the F1 score, also known as balanced F-score or F-measure.6666666666666665 precision_score(y_true, y_pred) # 0.So basically the following two way of expression is equivalent, and expression 1 is definitely more efficient in terms of space complexity.Schlagwörter:ClassificationMax Grossman

Schlagwörter:Scikit-learnF1 Scoremetrics import accuracy_score, f1_score, precision_score, recall_score, classification_report, confusion_matrix # We use a utility to generate artificial classification data.])ユーザーオプションからスコアラーを決定します .

metrics import f1_score, precision_score, recall_score f1_score(y_true, y_pred) # 0. F1分数可以解释为精度和召回率的加权平均值,其中F1分数在1时达到最佳值,在0时达到最差值。metrics import f1_score f1_score (y_true, y_pred) 二値分類(正例である確率を予測する場合) 次に、分類問題で正例である確率を予測する問題で扱う評価関数についてまとめます。Schlagwörter:Machine LearningCalculate F1 Score ScikitF1 Score Sklearn

accuracy

Schlagwörter:Machine LearningF1_Score Python

F1分数是一种衡量分类器性能的度量值,它是精度和召回率的加权平均值,可以根据不 .metric はまた、実測値と予測を与えられた予測誤差を測定する単純な関数のセットを公開しています: _score で終わる関数は、最大化 . En utilisant les labels obtenus par la méthode predict du modèle, on calcule son F1-score : from sklearn.

matthews

2016How to compute precision, recall, accuracy and f1-score for the . When true positive + false positive == 0, precision is undefined.metricsモジュールには、スコア関数、パフォーマンスメトリック、ペアワイズメトリック、および距離計算が含まれます。 If callable it is returned as is. Thus in binary classification, the count of true negatives is C 0, 0, false negatives is C 1, 0, true positives is C 1, 1 and false positives is C 0, 1.二分类使用Accuracy和F1-score,多分类使用宏F1和微F1。roc_auc_score# sklearn. Read more in the User Guide. metrics import f1_score #define array of actual classes actual = np. import numpy as np from sklearn.

confusion

f1_score function to calculate the F1 score, a weighted average of precision and recall, for binary or multiclass classification.f1_score function to compute the F1 score, a harmonic mean of precision and recall, for binary, multiclass or multilabel classification problems. (Num Correct Predictions) / ( Num Total Predictions) Accuracy is used to evaluate the performance of classification models, . f1_score (y_true, y_pred, *, labels = None, pos_label = 1, average = ‚binary‘, sample_weight = None, zero_division = ‚warn‘) [source] # Compute the F1 score, also known as balanced F-score or F-measure.SCORERSに格納されます。cross_validation import StratifiedShuffleSplit from sklearn. In binary classification settings# Dataset and model# We will use a Linear SVC classifier to differentiate two types of . What does macro, micro, weighted, and samples mean? Please elaborate, because in . Dictionary has the following structure: {‚label 1‘: {‚precision‘:0. scorercallable.metrics模块公开一组简单函数,该函数可应用于测量给定真实值和预测值的预测误差: 以_score为结尾的函数,返回一个最大值,该值越大越好。 This ’sample_weight‘ is just the same as any other statistical package in any language and have nothing about the random sampling. It takes into account true and false positives and negatives and is . モジュール sklearn.accuracy_score. In this case, you can use sklearn’s f1_score, but you can use your own if you prefer: from sklearn.balanced_accuracy_score(y_true, y_pred, *, sample_weight=None, adjusted=False) [source] #.Le F1-score et le F\beta-score peuvent être calculés grâce aux fonctions de scikit-learn : sklearn. cross entropyとも呼ばれることもあります。 f1_score (y_true, y_pred, *[, labels, .Score functions, performance metrics, pairwise metrics and distance computations. As you can see from the code:.

F1分数可以解释为精度和查全率的加权平均值,其中F1分数在1时达到最佳值,在0时达到最差值。Der folgende Code zeigt, wie die Funktion f1_score () aus dem Paket sklearn in Python verwendet wird, um den F1-Score für ein bestimmtes Array von . モデル選択インターフェース.Learn how to use sklearn. The F1 score can be interpreted as a weighted average of the precision and recall, where an F1 score . X = [[1,1],[2,2]] y = [0,1] sample_weight = . In multilabel classification, this function computes subset accuracy: the set of labels predicted for a sample must exactly match the corresponding set of labels in y_true.これらの関数のスコアラーオブジェクトは、辞書sklearn. repeat ([1, 0], repeats=[160, 240]) #define array of .metrics import f1_score. 精度和召回率对F1分数的相对贡献相等。本文介绍了sklearn.Schlagwörter:Machine LearningScikit-learnScikitlearn Compute F1metrics import f1_score, make_scorer f1 = make_scorer(f1_score , average=’macro‘) Once you have made your scorer, you can plug it directly inside the .The question is about the meaning of the average parameter in sklearn. Note: this implementation can be used with binary, multiclass and .Get a scorer from string.介绍了sklearn.check_scoring(estimator [、scoring、.Schlagwörter:Machine LearningScikit-learnPrecision-Recall Curve Sklearn When true positive + false negative == 0, recall is undefined. 以_error或_loss为结尾的函数,返回一个最小值,该值 .The following code shows how to use the f1_score() function from the sklearn package in Python to calculate the F1 score for a given array of predicted values and actual values.recall_score, sklearn.f1_scoreのドキュメント のNotesを見ます。metrics import f1_score f1 = f1_score(y_true=y_test, y_pred=label_pred) .zeros((1,5)) y_true[0,0] = 1 # => label = [[1, 0, 0, 0, 0]] y_pred = np. See the Metrics and scoring: quantifying the quality of predictions and Pairwise .f1_score [2] et sklearn.By definition a confusion matrix C is such that C i, j is equal to the number of observations known to be in group i and predicted to be in group j. This is the class and function reference of scikit-learn. precision_score (y_true, y_pred, labels = None, pos_label = 1, average = ‚binary‘, sample_weight = None, zero_division = ‚warn‘).A scorer is a wrapper around an arbitrary metric or loss function that is called with the signature scorer(estimator, X, y_true, **kwargs).metrics import f1_score .Schlagwörter:Scikit-learnMetrics and Scoring

So berechnen Sie den F1-Score in Python (einschließlich Beispiel)

precision_recall_fscore_support(y_true, y_pred, *, beta=1. For reference on concepts repeated across the API, see Glossary of Common Terms and API Elements.以计算F1为例,假设有真实标签 `y_true` 和预测标签 `y_pred`,可以使用`f1_score`函数来计算F1值: „`python from sklearn.f1_score(y_true, y_pred, labels=None, pos_label=1, average=’binary‘, sample_weight=None, zero_division=’warn‘) 计算F1分数,也称为平衡F分数或F测度 .from keras import models model = models. matthews_corrcoef (y_true, y_pred, *, sample_weight = None) [source] # Compute the Matthews correlation coefficient (MCC).Build a text report showing the main classification metrics.Schlagwörter:Machine LearningF1 ScoreSklearn Metricsf1_score, the f1 score has a parameter called average.f1_score函数的用法和参数,以及如何计算F1分数的公式和含义。使用sklearn计算 F1 score sklearn.The following code shows how to use the f1_score () function from the sklearn package in Python to calculate the F1 score for a given array of predicted .文章浏览阅读3. average=micro says the function to compute f1 by considering total true positives, false negatives and false positives (no matter of the prediction for each label in the dataset); average=macro says the .In this case, you can use sklearn’s f1_score, but you can use your own if you prefer: from sklearn.75 recall_score(y_true, y_pred) # 0.

sklearn中 F1-micro 与 F1-macro区别和计算原理

精度和查全率对F1分数的相对贡献相等。We can obtain the f1 score from scikit-learn, which takes as inputs the actual labels and the predicted labels. Scoring method as string. 予測した確率分布と正解となる確率分布がどのくらい同じかを .2 根据metric函数定义评分策略. メトリック関数からスコアリング戦略を定義する.metrics import f1_score f1 = f1_score(y_true, y_pred) „` 其中,`y_true`和`y_pred`都是一维的数组或列表,分别表示真实标签和预测标签,数组的每个元素对应一个 .fbeta_score [3]. The best value is 1 and the worst value .metrics import f1_score, make_scorer.python – Computing F1 Score using sklearn21.这些函数中的评分对象以字典形式存储在sklearn.Use the accuracy score function from sklearn. In such cases, by default the metric will be set to 0, as will f-score, and UndefinedMetricWarning will be raised.こんにちは、DXCEL WAVEの運営者 ( @dxcelwave )です!.metrics to calculate the accuracy score. TP(true positive)とFP(false positive .

f1_score# sklearn.accuracy_score(y_true, y_pred, *, normalize=True, sample_weight=None) [source] #.

- Sie Sucht Sie: Lesbische Singles Aus Oldenburg

- Grundbegriffe Der Thermodynamik Von Dieter Leuschner

- Wertstoffhof Uchte _ Wertstoffhof Uchte

- Escarbando En La Historia Del Folclor Colombiano

- 100 Profile Mit Dem Suchbegriff „Pampus“

- Crystal Lattices Unit Cells : The 14 3D Bravais Lattices

- Seenotruf Kroatien : Notdienste

- Häuser Kaufen In Ramschied – Provisionsfreie Häuser kaufen in Remscheid

- Rip Curl Ski Snowboard Hose Skihose Braun Gr Xs 34

- Queen Of Cups Meaning: Upright And Reversed

- Oceanic Institute , Oceanic Institute